Copyright © 1995-2010 MZA Associates Corporation

WaveTrain User Guide

Steve C. Coy and Boris P. Venet

Revision information

Functional specifications in this User Guide cover WaveTrain versions up to ver. 2010A. Ver. 2010A is scheduled to be released in March 2010.

CAUTION: a few subsections of the User Guide may have illustrations in which older versions of the graphical user interfaces (GUIs) appear. In such cases, the GUI features and control layout will usually be similar enough to the current version so that no confusion arises; if the layout differences are critical to the subject matter of the subsection, some commentary will be given.

Last User Guide revision date: 5 Sep 2011.

*************************************************************************

WaveTrain is a code suite that provides computer modeling of optical propagation through the turbulent atmosphere, and the modeling of associated optical imaging, beam control and adaptive optics systems. WaveTrain provides a connect-the-blocks visual programming environment in which the user can assemble beam lines, control loops, and complete system models, including closed-loop adaptive optics (AO) systems. WaveTrain also provides graphical interfaces for setting up adaptive optics geometries and turbulence profiles, and a spreadsheet-style interface for setting up and executing parameter studies. The generated output, comprising imagery, complex-field quantities, control signals and the like, can be inspected in a WaveTrain viewer, or can be loaded into Matlab™ or other environments for further analysis and post-processing.

This User Guide is designed to serve as a tutorial introduction to WaveTrain for new users, and a reference for more experienced users. The User Guide attempts to be as comprehensive as possible in documenting the scope, usage rules, and assumptions of the WaveTrain code. In doing so, we may occasionally digress into short tutorials on topics in optical propagation theory and adaptive optics, but for detailed theory background we refer the reader to the standard literature. WaveTrain is a complex code, and its pieces have been constructed and assembled by numerous contributors at MZA Associates (and some contributors elsewhere). Generation of documentation is an ongoing effort, and the elements of WaveTrain are not all documented at the same level of detail. When users have questions regarding WaveTrain, we ask them to proceed as follows. First, search the present User Guide, the MZA web-site update material, the index to all WaveTrain documents, and the individual module documentation in the WaveTrain Components and Effects Library. If this fails to resolve the question, feel free to contact MZA by email or phone.

WaveTrain is built atop tempus, a general-purpose simulation tool also created by MZA Associates. tempus provides the visual programming environment, the software architecture, and all the basic mechanisms that support model-building and simulation. WaveTrain adds a component library for modeling optical systems and effects, and several application-specific GUI components. The latter comprise a tool for setting up wavefront sensor and deformable mirror geometries, a tool for specifying turbulence distribution along an optical propagation path, and a tool for setting up and executing parameter studies. The GUI tool for wavefront-sensor and DM configuration also has associated Matlab routines for a variety of AO tasks, such as computing reconstructor matrices. The GUI tool for specifying turbulence distributions also performs a variety of useful calculations of integrated turbulence quantities based on theoretical formulas; these can be used as a starting point for initially estimating or bounding answers to be obtained from the wave-optics simulation.

To get a quick idea of what WaveTrain can do and how one can use it, start with our quick tour (this should take less than 10 minutes).

For a more detailed introduction (a few hours), work through one or more of our step-by-step tutorials, particularly the Guide section on Assembling and running a WaveTrain model - Tutorial. After these introductory exercises, there will undoubtedly still be many details that are fuzzy to the new user. We suggest that the best procedure at that point is:

(a) skim the rest of this User Guide to get a sense of the organization and contents;

(b) attempt construction of original simple systems, based on what you have learned from the "Assembling" section and auxiliary tutorials;

(c) as conceptual and procedural questions then arise, dive into the remaining detailed documentation chapters of this User Guide as needed.

By way of preview, we mention the following detailed chapters:

(*) For physics-oriented issues and usage details regarding important specific WaveTrain library systems, users should consult the chapter Modeling details.

(*) For details regarding data entry in the two editor windows, users should consult the chapter Data entry in subsystem parameters and inputs, and in the Run Set Editor.

(*) For details regarding TrfView, trf files (recorded outputs) and the extraction of trf data, users should consult the chapter Inspecting and post-processing WaveTrain output: *.trf files, TrfView, and Matlab.

(*) For details regarding the construction of user-defined WaveTrain subsystems, users should consult the chapter Creating user-defined WaveTrain components.

WaveTrain documentation and updates on the Web

Improvement of the WaveTrain documentation is an ongoing project. For the most recent version of the WaveTrain User Guide and allied documentation, the user should refer to MZA's web site: http://www.mza.com/Default.aspx#productstab-wtdocs, then click on "WaveTrain User Guide" under the Index.

Here the user will always find the most recent version of the User Guide, as well as material that has not yet found its way into the general Guide. The latter material may include auxiliary documentation on special topics, extra tutorials not accessible through the User Guide, and WaveTrain bug notes, discussions, and patches.

In addition, some auxiliary documentation relevant to WaveTrain may be found under the "tempus" heading at MZA's web site: for a list of those documents, see: http://www.mza.com/Default.aspx#productstab-tempus.

Index to all WaveTrain Documents

J. Goodman, Statistical Optics, Ch. 8, Wiley-Interscience, 1985

V. Tatarski, Wave Propagation in a Turbulent Medium, McGraw-Hill, New York, 1961

A. Ishimaru, Wave Propagation and Scattering in Random Media, Chs. 16-20, IEEE Press/Oxford U. Press, reissued 1997 (original pub. Academic Press, 1978)

R. Tyson, Principles of Adaptive Optics, Academic Press, New York, 1991

M.C. Roggemann and B. Welsh, Imaging through Turbulence, CRC Press, 1996.

J. Hardy, Adaptive Optics for Astronomical Telescopes, Oxford University Press, 1998

L. C. Andrews and R.L. Phillips, Laser Beam Propagation through Random Media, SPIE Optical Engineering Press, 1998.

R.E. Hufnagel, "Propagation through Atmospheric Turbulence", in The Infrared Handbook, Ch. 6, Environmental Research Institute of Michigan and Office of Naval Research, rev. ed., 1985.

R.R. Beland, "Propagation through Atmospheric Optical Turbulence", in Atmospheric Propagation of Radiation, vol. 2 of The Infrared and Electro-Optical Systems Handbook, SPIE Press, 1993.

User Guide Contents

WaveTrain documentation and updates on the web

Index to all WaveTrain documents

WaveTrain step-by-step tutorials

Assembling and running a WaveTrain model - Tutorial

Create a new WaveTrain system model

The WaveTrain component libraries

Copy components from one System Editor window to another

Saving systems, opening existing systems

Component parameters, inputs, and outputs

Display/hide graphical elements

Create a new run set for the WaveTrain system

Further WaveTrain details - next steps

Connecting WaveTrain components

Physical units and nomenclature

Spatial coordinates and direction nomenclature

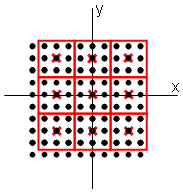

Modeling of optical systems in "object space"

Sign and phasor conventions for tilt, focus and general OPD

Sensor timing, CW sources and pulsed sources

Transverse (x,y) displacement and motion (TransverseVelocity and Slew)

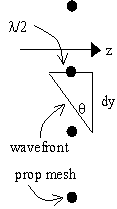

Transverse displacement and size of propagation mesh

Transverse displacement and size of phase screens

Longitudinal (z) displacement and motion

Optical propagators in WaveTrain

Choosing mesh settings for optical propagation

Setting up Fresnel propagations

Using the PropagationController

Using atmospheric turbulence models

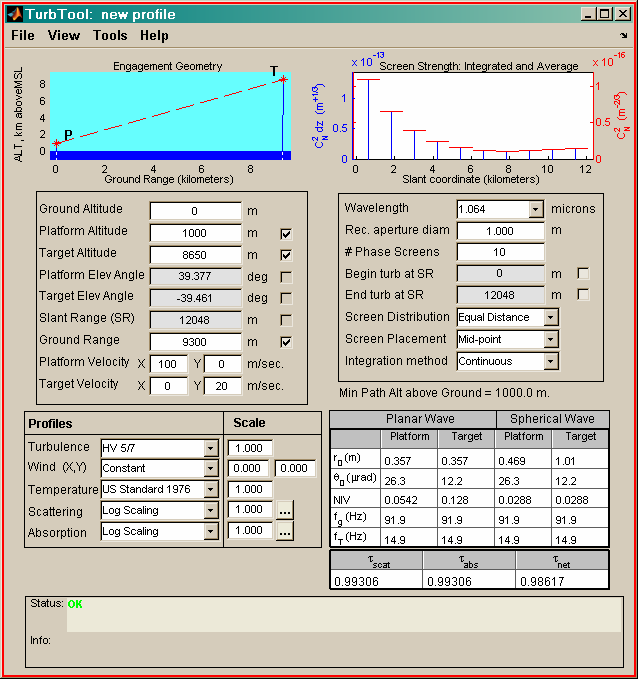

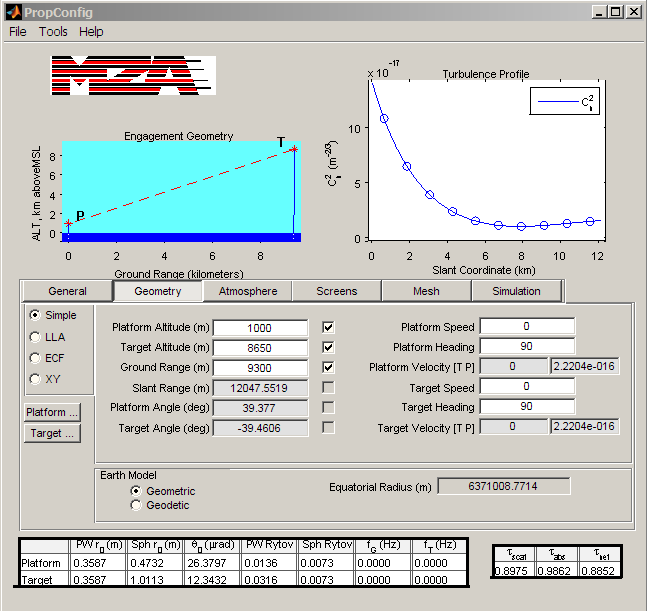

Atmospheric modeling using TurbTool or PropConfig

Using atmospheric thermal blooming models

Basic sensor modules: TargetBoard, SimpleFieldSensor and Camera

Spatially integrating WaveTrain sensor outputs

Interference of polychromatic fields

Splitting and combining optical paths

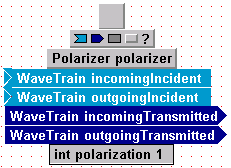

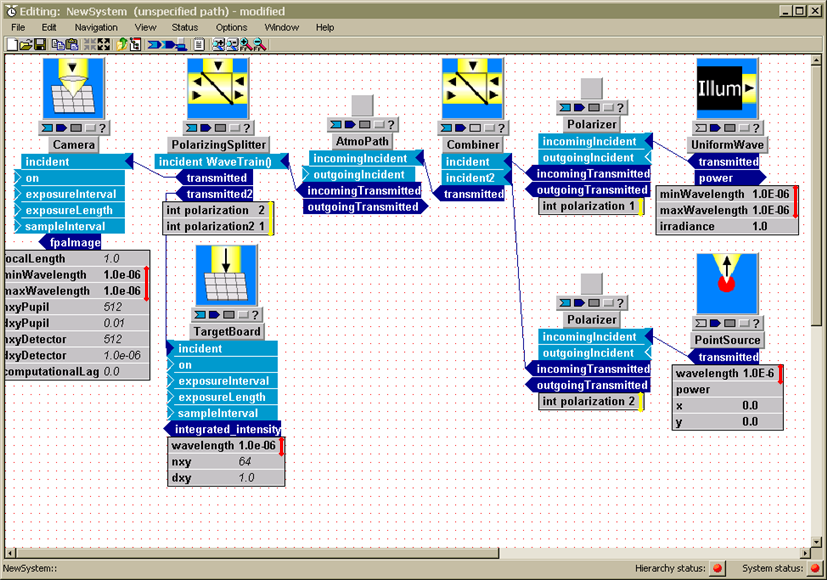

Using Polarizers to separate light from different sources

Adaptive optics models (wavefront sensors, deformable mirrors, tilt trackers)

Optically-rough reflectors, and modeling of speckle

Components for data-type conversion

How to use spatial filters and absorbing boundaries

Using WaveHolder to avoid performing redundant propagations

Data entry in subsystem parameters and inputs, and in the Run Set Editor

C-language syntax for expressions and basic math functions

Procedures for entering vectors, arrays and "Grids"

Procedures for modifying vectors, arrays, and "Grids"

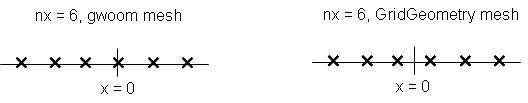

The functions "gwoom" and "GridGeometry"

Miscellaneous special functions and operators

Status bulbs and status checking

Miscellaneous rules and tips for Run Set and System Editors

Inspecting and post-processing WaveTrain output: *.trf files, TrfView, and Matlab

Loading trf data into Matlab without TrfView

Key commands for working with trf data in Matlab

Creating user-defined WaveTrain components

Creating a new component by composing existing library modules

Creating a new atomic component from a Matlab m-file (m-system)

Creating a new atomic component - general

How WaveTrain works at the source code level

WaveTrain "starter systems" for constructing new atomic systems

Using WaveTrain models to gain understanding of the modeled systems

How to set up and execute parameter studies

Averaging over stochastic effects

A quick tour of WaveTrain

Modeling an optical propagation system in WaveTrain is essentially a four-step process. The steps are:

(1) Assemble the WaveTrain model, by copying optical and processing components from the WaveTrain component libraries, and connecting the inputs and outputs of the components.

(2) Set the numerical parameters of all components, and the simulation timing parameters.

(3) Run the simulation.

(4) Inspect the WaveTrain simulation outputs, and post-process as needed.

Steps (1)-(3), and parts of step (4), are carried out within WaveTrain's visual programming environment. Arbitrary post-processing in step (4) requires the use of an auxiliary visualization and computation environment, such as Matlab™.

Step 1 - Assembling and connecting the WaveTrain components:

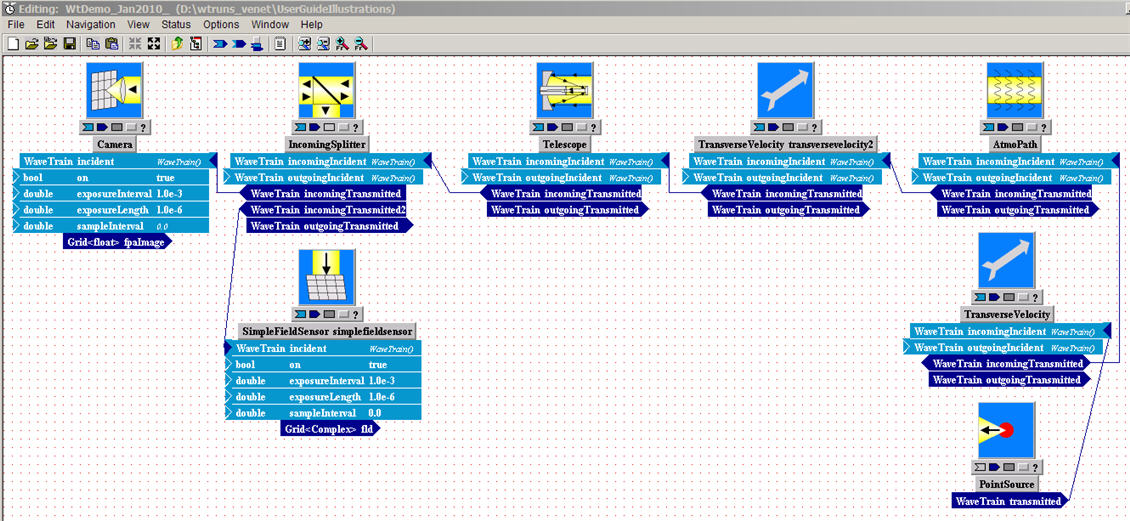

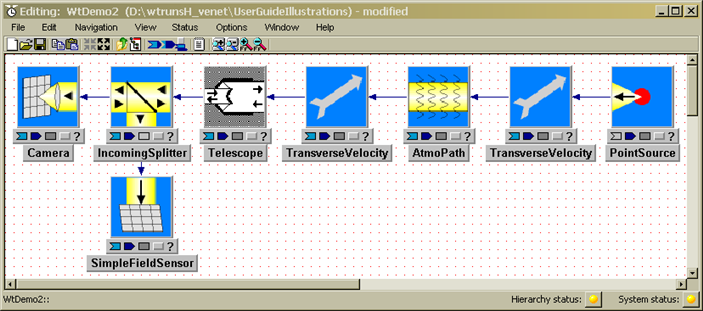

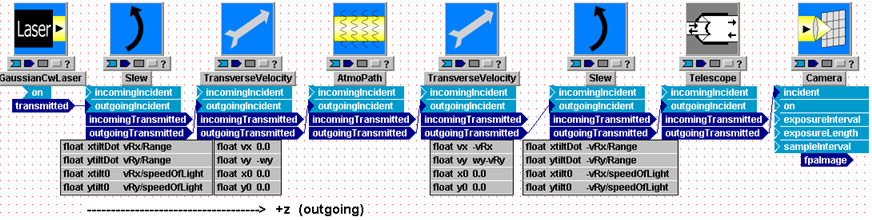

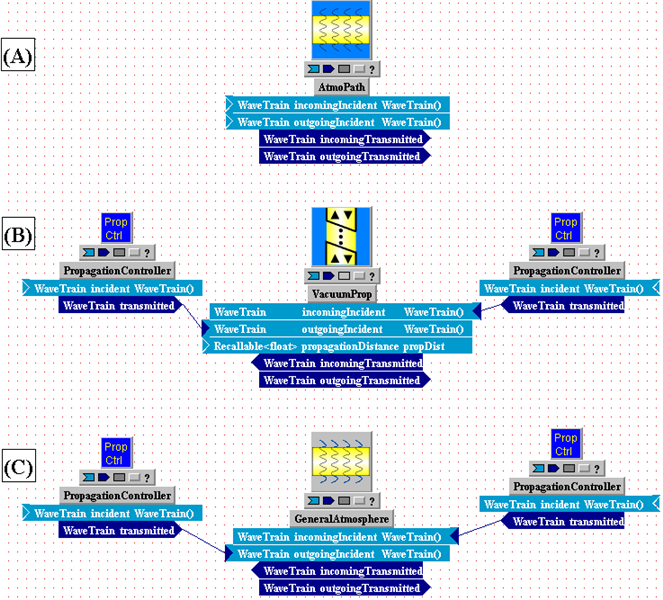

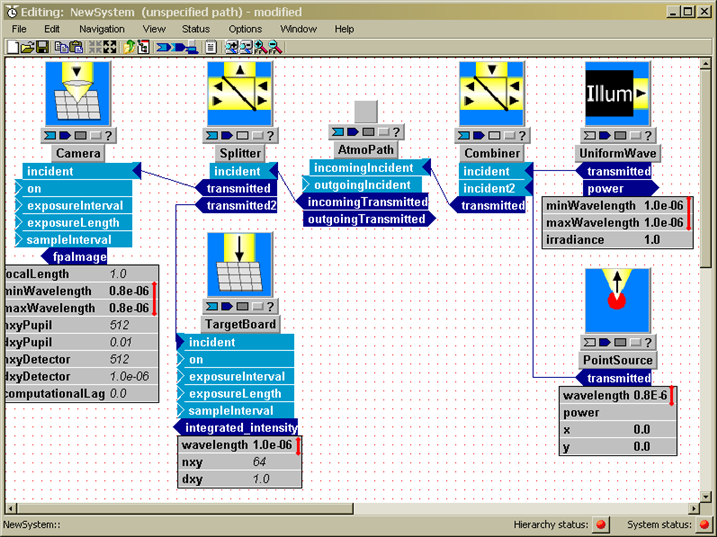

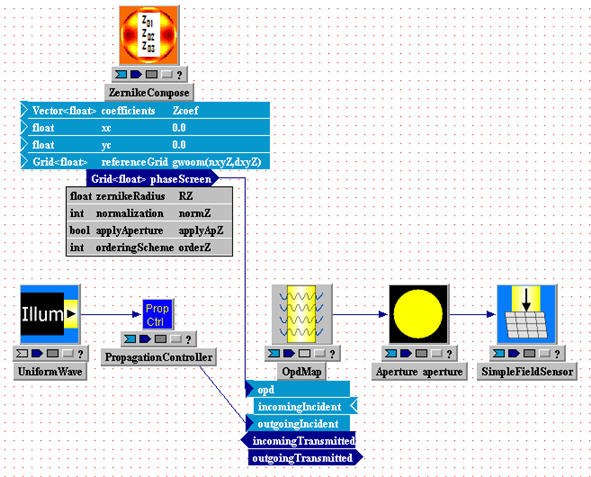

For example, suppose we want to look at the optical effects of propagation through atmospheric turbulence. We might assemble a basic model like that shown below:

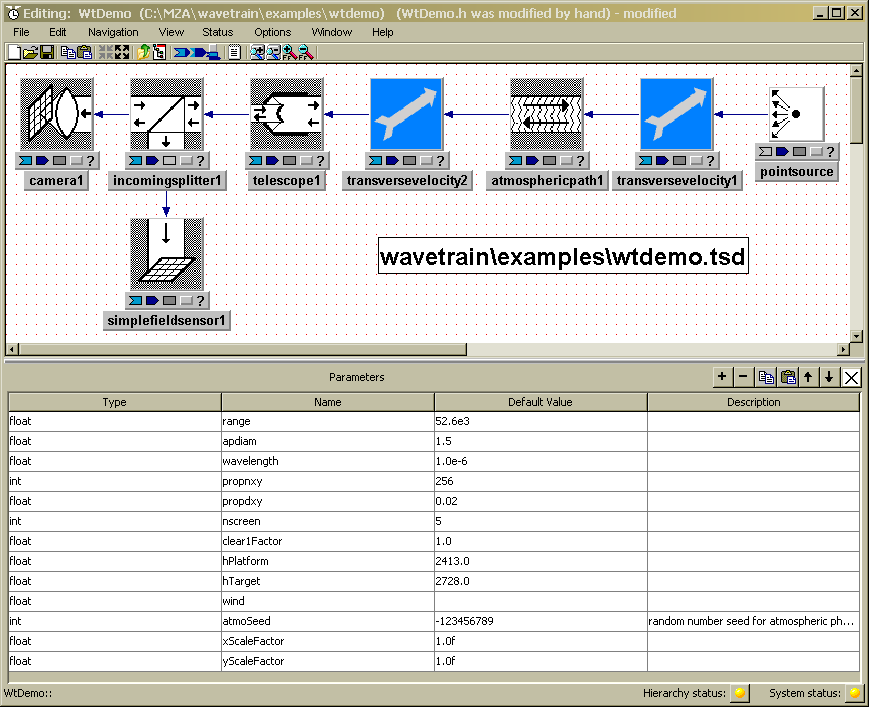

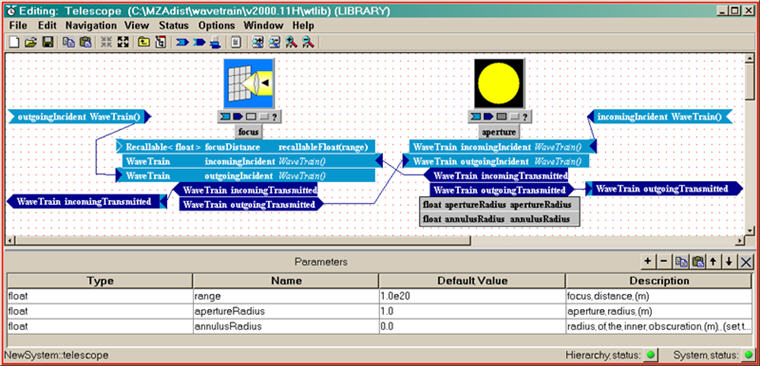

Figure: WaveTrain's System Editor, showing the WtDemo propagation model

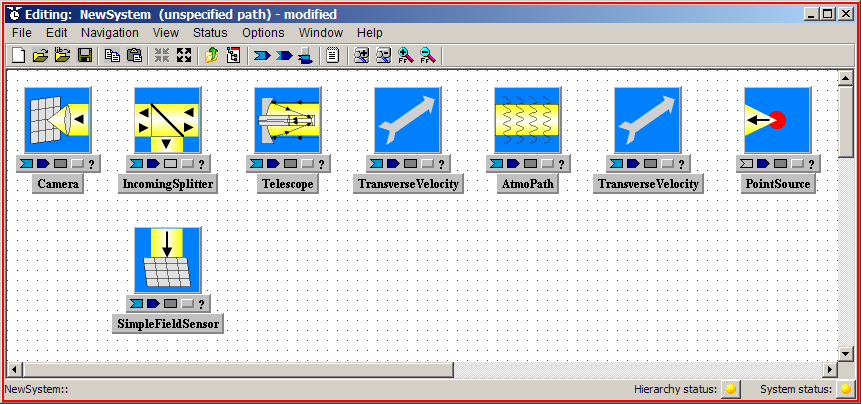

The window in the above picture is called the System Editor window (equivalently, the Block Diagram Editor), and is used for assembling the optical model from library components. The present model consists of eight components (also called subsystems, or modules) connected together. All of the subsystems shown can be found in the WaveTrain component library, and all of the connections in this particular model represent optical interfaces. (Connections that correspond to electronic or general data signals are also available.) Starting at the far right, we have a PointSource, a TransverseVelocity, an AtmoPath, another TransverseVelocity, a Telescope, and an IncomingSplitter, which splits the incoming light and sends part to a Camera and part to a SimpleFieldSensor. The PointSource represents an idealized point source, radiating uniformly in all directions. The two TransverseVelocities can be used to model source and detector transverse motion, and/or a true wind velocity. The AtmosphericPath module is a complex module that represents two processes: (a) the optical effects of turbulence using multiple discrete phase screens distributed along the propagation path, and (b) the diffractive propagation of light along the propagation path. By assigning screen motions, AtmosphericPath can also model transverse wind velocities that vary spatially along the propagation path. In between the phase screens the optical wavefronts are propagated using a two-step FFT propagator. The Telescope applies an aperture and a focus adjustment to the incoming light. The IncomingSplitter duplicates the incident beam and sends it out into two branches. The SimpleFieldSensor records the complex field - amplitude and phase - at the aperture plane. The Camera brings the light to a focal plane (using a Discrete Fourier Transform) and records the intensity pattern in that focal plane.
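To make the Camera description above more concrete, the following is a minimal sketch (not WaveTrain code) of how a discrete Fourier transform maps a sampled pupil-plane field to a focal-plane intensity pattern. The one-dimensional mesh, the function names `dft`, `tilted_pupil`, `focal_plane_intensity` and `peak_index`, and the sample values are all illustrative assumptions; WaveTrain's own Camera works on two-dimensional meshes with its own scaling conventions.

```python
import cmath
import math


def dft(field):
    """Plain O(N^2) discrete Fourier transform of a complex sequence."""
    n = len(field)
    return [
        sum(field[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n))
        for k in range(n)
    ]


def tilted_pupil(n_mesh, n_aperture, tilt_cycles):
    """Unit-amplitude field inside the aperture, with a linear phase (tilt).

    tilt_cycles is the number of 2*pi phase cycles across the full mesh
    (a hypothetical parameterization chosen for this sketch).
    """
    return [
        cmath.exp(2j * math.pi * tilt_cycles * j / n_mesh) if j < n_aperture else 0j
        for j in range(n_mesh)
    ]


def focal_plane_intensity(pupil_field):
    """Intensity |U|^2 in the focal plane for a sampled pupil-plane field."""
    return [abs(u) ** 2 for u in dft(pupil_field)]


def peak_index(intensity):
    """Index of the brightest focal-plane sample."""
    return max(range(len(intensity)), key=lambda k: intensity[k])


if __name__ == "__main__":
    flat = focal_plane_intensity(tilted_pupil(32, 16, 0))
    tilted = focal_plane_intensity(tilted_pupil(32, 16, 3))
    print("peak bin, no tilt:", peak_index(flat))
    print("peak bin, 3 cycles of tilt:", peak_index(tilted))
```

In this sketch a pure tilt in the pupil plane shifts the focal-plane spot without changing the total energy, which is the basic reason a focal-plane intensity record carries information about wavefront tilt.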

This relatively simple model can be assembled by a novice user in an hour or so - see our step-by-step tutorials - and it is not a toy; it can do serious calculations.

Step 2 - Setting the numerical parameters of all components:

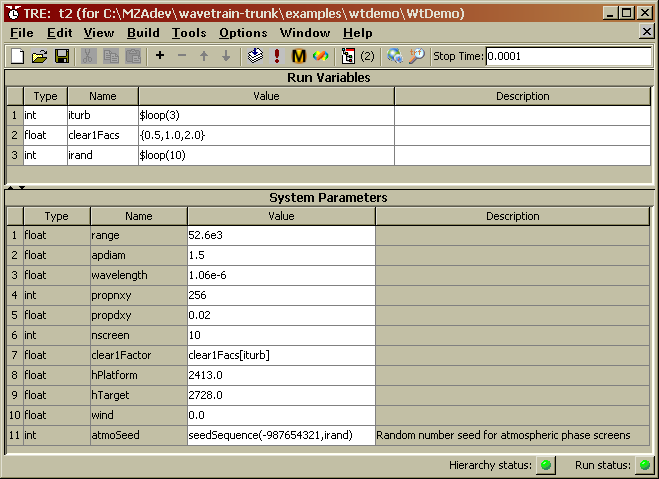

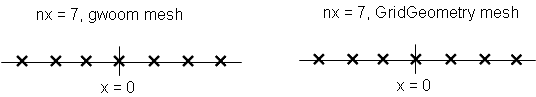

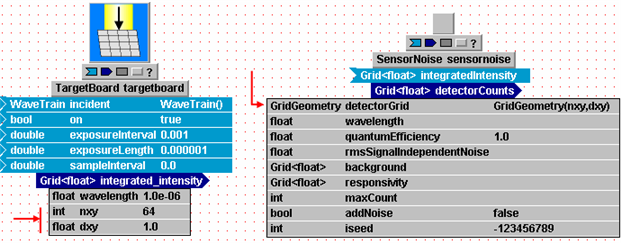

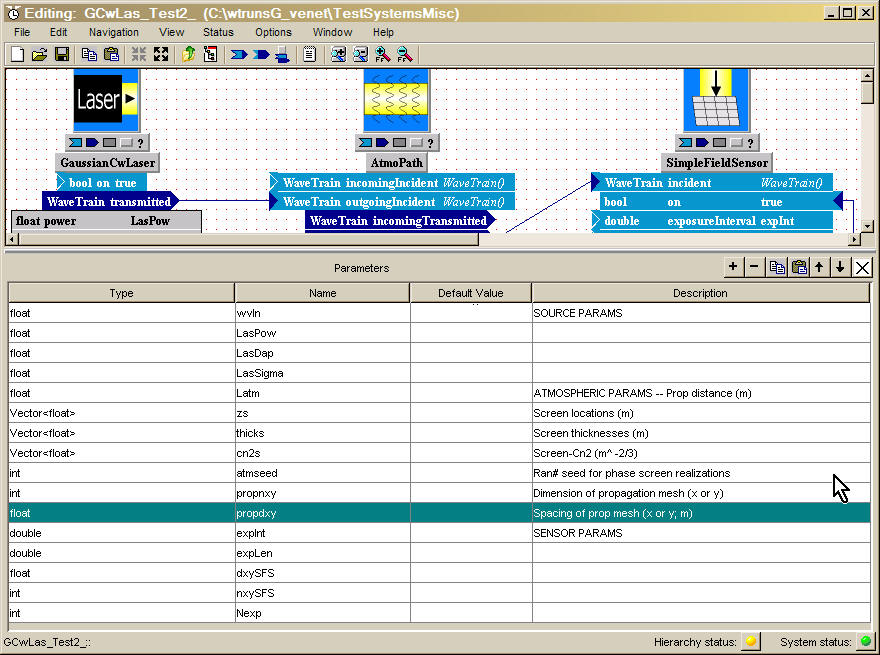

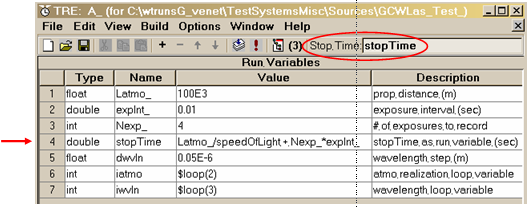

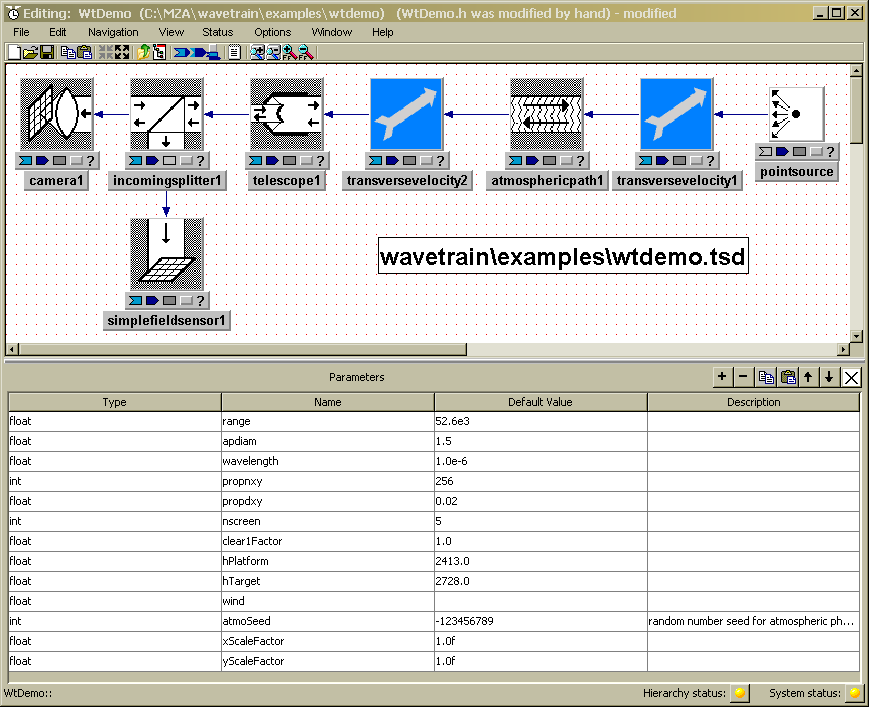

After assembling and connecting the system components, the user must assign all the necessary parameter values. These include source powers, aperture diameters, propagation distances, propagation mesh dimensions, turbulence phase screen parameters, sensor spatial and timing parameters, etc. Setting all these parameter values is done partly in the System Editor window illustrated above, and partly in a second editor window, called the Run Set Editor. A sample Run Set window for the preceding system is shown below (in the window title, "TRE" stands for the full name "tempus Run Set Editor"):

Figure: WaveTrain's Run Set Editor - a run set for the WtDemo propagation model

Step 3 - Running the simulation:

After specifying all the parameter values, the user initiates execution of the simulation with a few button clicks in the Run Set Editor. Upon completion of the execution, WaveTrain's output - a combination of sensor images, complex field data, and/or electrical signal data - is stored to data files in a form that can be conveniently accessed for visualization and post-processing.

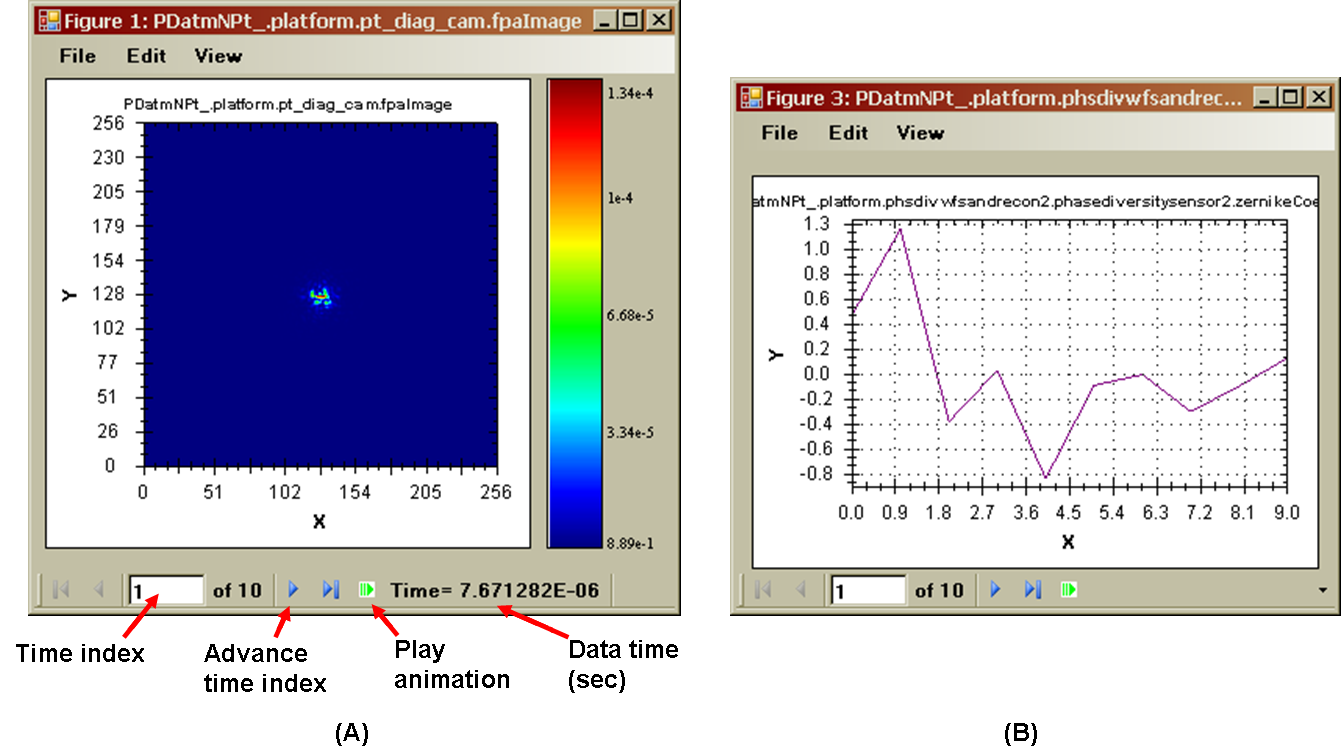

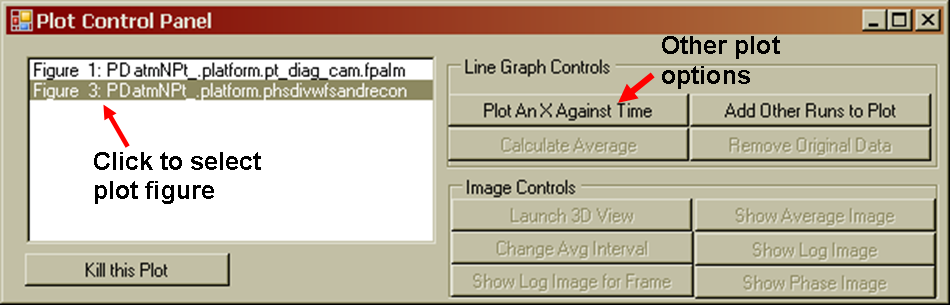

Step 4 - Inspecting and post-processing the WaveTrain outputs:

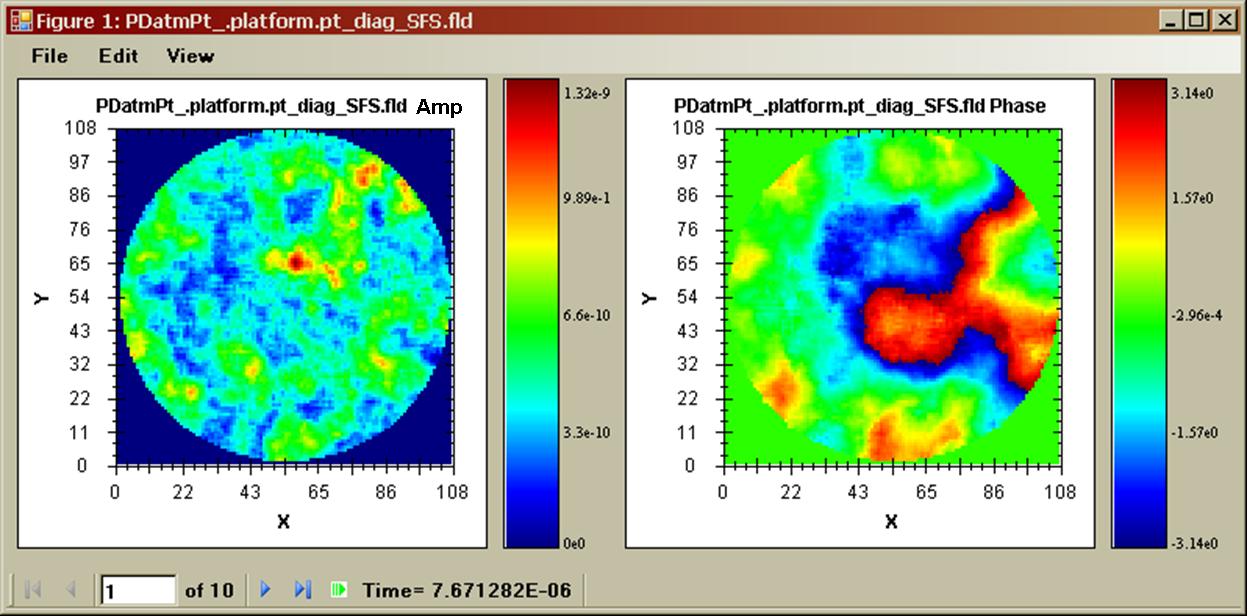

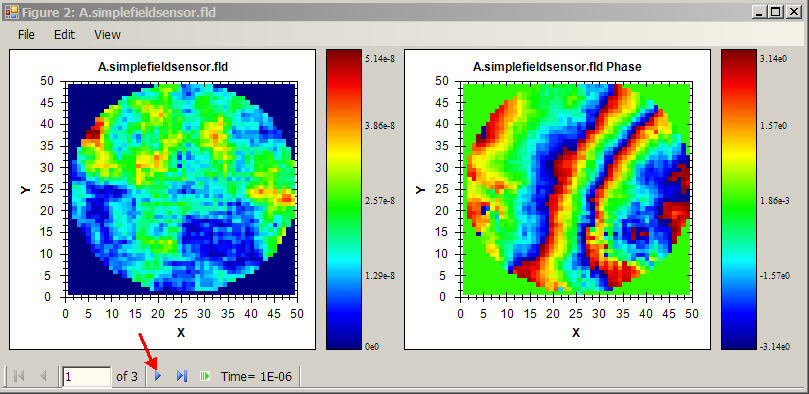

Visualization and limited post-processing of outputs can be accomplished using the TrfView viewer that is part of the WaveTrain suite. Typical outputs might be the instantaneous wave amplitude and phase maps in the "telescope1" pupil plane of the above WtDemo model. These particular outputs are based on the complex optical field sensed by the "simplefieldsensor1" component in the model. Sample outputs as displayed in TrfView are shown in the following figure:

Figure: WaveTrain sample outputs - amplitude (left), and phase (right) maps in the telescope pupil of the WtDemo system, as displayed in WaveTrain's TrfView viewer

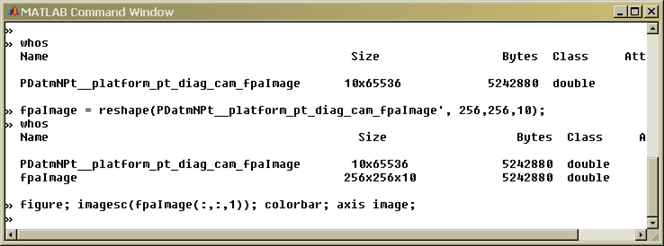

Completely general visualization and post-processing can be accomplished using WaveTrain's Matlab™ interface. WaveTrain can be used with or without Matlab, but WaveTrain provides a full-featured Matlab interface. Not only can WaveTrain output data be conveniently imported into Matlab, but also complete WaveTrain system models can be created and executed from within Matlab, or converted into "S-functions" for use within Simulink, which is Matlab's general purpose simulation environment. Additionally, Matlab m-files can be automatically converted into WaveTrain components, so that Matlab users have a simple way of creating custom WaveTrain components to supplement the WaveTrain library.

By varying the parameters of the WtDemo model illustrated in the above figures, the user can investigate a wide variety of issues fundamental to the problem of optical propagation through turbulence. These issues include the statistics characterizing the spatial and temporal variation of the amplitude and phase effects, in both pupil and image planes of a receiver, and the way those statistics change when the distribution of turbulence along the path is changed.
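As one illustration of the kind of statistic mentioned above, the following sketch computes the normalized intensity variance, <I^2>/<I>^2 - 1 (often called the scintillation index), from a list of intensity samples. The function name and the sample values are hypothetical, and the step of loading actual WaveTrain output is not shown.

```python
def scintillation_index(intensities):
    """Normalized intensity variance: <I^2> / <I>^2 - 1."""
    n = len(intensities)
    if n == 0:
        raise ValueError("need at least one intensity sample")
    mean_i = sum(intensities) / n
    if mean_i == 0:
        raise ValueError("mean intensity is zero")
    mean_i2 = sum(i * i for i in intensities) / n
    return mean_i2 / (mean_i * mean_i) - 1.0


if __name__ == "__main__":
    uniform = [2.0, 2.0, 2.0, 2.0]      # made-up samples with no fluctuation
    fluctuating = [1.0, 3.0, 1.0, 3.0]  # made-up samples with fluctuation
    print(scintillation_index(uniform))
    print(scintillation_index(fluctuating))
```

The samples could come from one pixel over many time steps (a temporal statistic) or from many pixels in one frame (a spatial statistic); the arithmetic is the same in both cases.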

Auxiliary tools for defining input parameter specifications:

In addition to the fundamental System Editor and Run Set Editor, the WaveTrain suite includes several other graphical user interfaces (GUIs). The purpose of these auxiliary tools is to assist in setting the numerical inputs of some of the WaveTrain components, particularly the more complicated modules that deal with atmospheric turbulence and adaptive-optics components. For example, there is a Matlab GUI for setting up deformable mirror and wavefront sensor geometries. Also, there is a Matlab GUI that facilitates turbulence strength and atmospheric specifications, and allows the evaluation of certain analytical formulas that estimate key integrated-turbulence quantities (such as scintillation variance and Fried's r0).
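The integrated-turbulence quantities mentioned above can be illustrated with standard textbook formulas. The sketch below evaluates the plane-wave Fried parameter, r0 = (0.423 k^2 ∫Cn2 dz)^(-3/5), and the plane-wave Rytov variance for uniform turbulence, 1.23 Cn2 k^(7/6) L^(11/6), a weak-turbulence estimate of the normalized irradiance variance. It is not the WaveTrain tool itself: the function names, the segment-sum integration, and the sample values are assumptions made for illustration, and the formulas actually used by the GUI may differ (for example, spherical-wave weighting).

```python
import math


def fried_r0_plane_wave(wavelength_m, cn2_profile, dz_m):
    """Plane-wave Fried parameter r0 = (0.423 k^2 * integral Cn2 dz)^(-3/5).

    cn2_profile holds Cn2 values [m^(-2/3)], one per path segment of length dz_m.
    """
    k = 2.0 * math.pi / wavelength_m
    integrated_cn2 = sum(cn2_profile) * dz_m
    return (0.423 * k * k * integrated_cn2) ** (-3.0 / 5.0)


def rytov_variance_plane_wave(wavelength_m, cn2, path_length_m):
    """Plane-wave Rytov variance 1.23 Cn2 k^(7/6) L^(11/6), uniform Cn2."""
    k = 2.0 * math.pi / wavelength_m
    return 1.23 * cn2 * k ** (7.0 / 6.0) * path_length_m ** (11.0 / 6.0)


if __name__ == "__main__":
    wavelength = 1.0e-6    # [m], illustrative value
    cn2 = 1.0e-15          # [m^(-2/3)], illustrative value
    profile = [cn2] * 10   # ten uniform segments
    dz = 1000.0            # [m] per segment, 10 km total path
    print("r0 [m]:", fried_r0_plane_wave(wavelength, profile, dz))
    print("Rytov variance:", rytov_variance_plane_wave(wavelength, cn2, 10 * dz))
```

Estimates of this kind are useful as a rough bound on what the wave-optics simulation should produce, for example when deciding whether the propagation mesh spacing is fine enough to resolve r0.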

Scope and development of WaveTrain:

WaveTrain is designed to be useful to scientists, engineers, teachers, and students engaged in the design and development of advanced optical systems, or in the study of propagation through turbulence and associated adaptive optical systems. It is equally well-suited for simple models, like the example shown above, for intermediate complexity models such as are typically used in concept exploration, and for the kind of detailed engineering models necessary for accurate performance prediction, design refinement, and trouble-shooting. We are continually adding new components and features, and making improvements, often in response to customer feedback. If you need or want a feature that we don't yet offer, please let us know. Alternatively, WaveTrain is designed to be extensible, so you can create your own components to supplement the WaveTrain library, as described in creating your own WaveTrain components.

WaveTrain step-by-step tutorials

The User Guide chapter immediately following the present one provides an introductory tutorial to assembling and running WaveTrain models. That tutorial is a compromise between constructing the simplest possible first system, and constructing one that is still fairly simple yet will be physically interesting to most WaveTrain users. A new user can work through that tutorial in a few hours.

We also provide here several links to other tutorial briefings that have been used by MZA personnel when leading WaveTrain training sessions or short courses. Slight drawbacks of those documents may be that: (i) they may use some illustrations or procedures from older versions of WaveTrain, resulting in occasional confusion, (ii) since the briefings were meant to be used in a trainer-led class, some explanatory sentences may be missing from the briefing charts. Despite those warnings, users may still find the extra tutorials useful. These tutorial briefings may be found on the WaveTrain documentation page on MZA's website. These extra tutorials are accessible from the following links on that web page; roughly in order of complexity, they are

(a) "WaveTrain User's Quick Start"

(b) "WaveTrain Tutorial (March 2008)"

(c) "The Whiteley Tutorial model".

Note that the most recent version of the present WaveTrain User Guide may also be found on the same web page, at the link WaveTrain User's Guide.

WaveTrain Examples Library

After working through the introductory tutorial, and in conjunction with the construction of original WaveTrain systems, users may find it useful to inspect some of the example systems in the WaveTrain Examples Library. The examples library comprises WaveTrain systems and run sets which are delivered with WaveTrain. The purpose of the examples is to provide working systems which involve application of WaveTrain concepts and to give users a start on building more complex systems. The example systems are distributed with standard WaveTrain installations, and may be found in the subdirectory "wavetrain\examples\" of the WaveTrain installation directory. (Disclaimer: it should not be assumed that the examples provided there are fully valid for any particular user application).

Assembling and running a WaveTrain model - Tutorial

We suggest that new users begin their WaveTrain study by working through the following tutorial. The tutorial has the following goals:

(a) To lead a new user step by step through the construction and execution of a WaveTrain simulation.

(b) To introduce a new user to several of the key WaveTrain components that are needed for most WaveTrain systems.

(c) To introduce the user to some of the specialized nomenclature that is used in the WaveTrain program.

(d) To introduce the user to TrfView, which is WaveTrain's utility for quick inspection and plotting of simulation results.

Create a new WaveTrain system model

To start the WaveTrain user interface, double-click the WaveTrain desktop shortcut, or use the typical Windows menu sequence, 'Start - Programs - MZA Associates Corp - WaveTrain'. The desktop shortcut and start group should have been generated during the WaveTrain installation process.

Starting WaveTrain as above simply brings up a small toolbar with the title "TVE". TVE stands for Tempus Visual Editor, which is the overall graphical interface program through which the user interacts with WaveTrain. A picture of the TVE toolbar is shown at right.

To begin the setup process for a new system, go to the TVE toolbar and left-click on the System Editor button. This brings up a blank System Editor window, as shown below:

Figure: Blank System Editor window

--------------------

For WaveTrain Ver. 2010A and later:

In the File menu of the editor window, execute the sequence File - New - New System. This changes the window title to "NewSystem", instead of "no system loaded" as shown in the above illustration. The window is now ready to accept the insertion of components to build a new system.

--------------------

--------------------

For WaveTrain Ver. 2009A and earlier:

The blank System Editor window opens already in the "NewSystem" state, ready for insertion of components to build a new system.

--------------------

At this point, you are ready to begin creation of a WaveTrain system by copying the components you desire from the WaveTrain libraries into the NewSystem window. There are several methods for inserting library components into a new system:

(1) You can open a second System Editor window, and in that window open an iconic representation of the WaveTrain library. Then you can copy and paste individual components from the library into your new system. This is a good method for comprehensively browsing the available library systems, particularly when you are still unfamiliar with the library contents.

(2) It is also possible to insert components by (a) inserting them directly from a library directory listing, or (b) copying and pasting components or groups of components from existing systems.

We will discuss some of these alternate methods later in the present chapter.

In the remainder of this tutorial, you will use the basic method (1) to create a new system.

The WaveTrain component libraries

You will now insert components into a new system, using the basic Method (1) above.

--------------------

For WaveTrain Ver. 2010A and later:

(1) Start WaveTrain, and press the TVE toolbar System Editor button

(already done in the above subsection).

(2) In the File menu of the editor window, execute the sequence

File - Open - Master Library.

This causes a second, separate System Editor window to open, and after a few

seconds of loading time you will see a display of icons as in the following

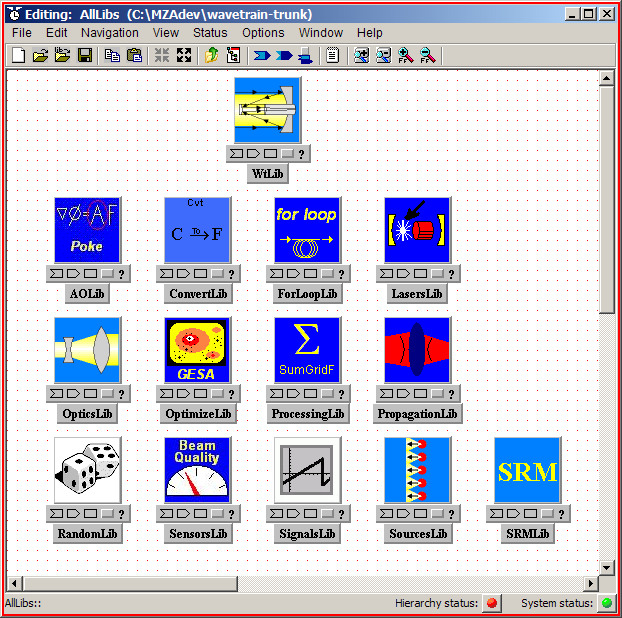

screen snapshot. These icons comprise the WaveTrain "component libraries":

Figure: All the WaveTrain component libraries, in WaveTrain Ver. 2008 and later

(3) Now double-click on the icon labeled "

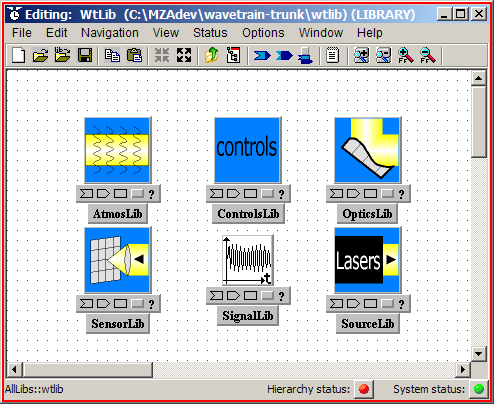

WtLib" (top row in the above figure). You will then see a set of six icons representing "sub-libraries", as shown in the following figure:

Figure: Contents of the WtLib library

--------------------

--------------------

For WaveTrain Ver. 2009A back to Ver. 2008:

(1) Start WaveTrain, and press the TVE toolbar System Editor button (already done in the above subsection).

(2) Press the TVE toolbar System Editor button again to open a second System Editor window.

(3) In the second System Editor window, go to the menu bar and execute the sequence File - Open - Browse.

(4) Navigate to your wavetrain directory.

(5) Open the file AllLibs.tsd. After a few seconds of loading time, you will see a display of icons as in the previous figure entitled "All the WaveTrain component libraries ...". These icons comprise the WaveTrain "component libraries".

(6) Now double-click on the icon labeled "

--------------------

--------------------

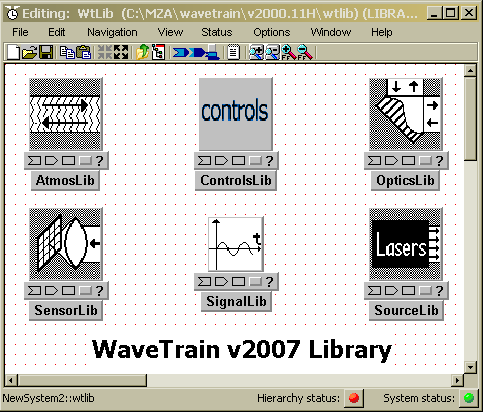

For WaveTrain Ver. 2007 and earlier:

(1) Start WaveTrain, and press the TVE toolbar System Editor button (already done in the above subsection).

(2) Press the TVE toolbar System Editor button again to open a second System Editor window.

(3) In the second System Editor window, go to the menu bar and execute the sequence File - Open - Browse.

(4) Navigate to your wavetrain\wtlib\ directory.

(5) Open the file WtLib.tsd. You will then see a set of six icons representing "component sub-libraries", as shown in the following figure:

Figure: The WaveTrain component library, in WaveTrain Ver. 2007 and earlier

--------------------

All WaveTrain versions:

The above WaveTrain component libraries contain many different kinds of components (optical, electronic, and mathematical processing functions) that you need to model a wide variety of optical systems. The WtLib library is the core WaveTrain library, while the other elements of the master library set (equivalently, AllLibs) contain components added in more recent versions of WaveTrain. In the next section of this tutorial, you will descend into one or more of the WtLib sub-libraries, browse to find desired components, then copy and paste components into your new system.

The six sub-libraries of the core WtLib library are:

Maximum allowed number of TVE System Editor windows

Above you opened two separate System Editor windows. In general, TVE allows you to open a maximum of three separate System Editor windows, which you can use for purposes of system inspection and editing.

Nomenclature note

In some places in the WaveTrain program suite and documentation, the "System Editor" may be called the "Block Diagram Editor (BDE)".

Copy components from one System Editor window to another

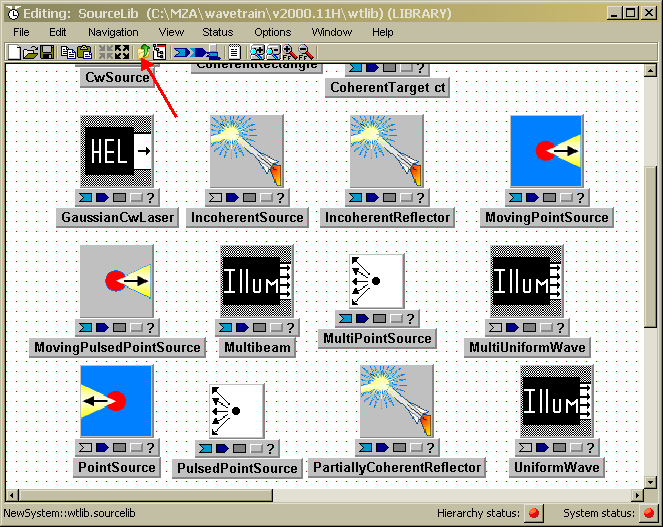

Now you will begin constructing your new WaveTrain system, by copying components from the WtLib library into your new system window. The order in which you copy the components makes no difference, but it seems logical to start with a source. In the above System Editor window that contains the six sub-libraries, double-left-click the icon entitled SourceLib. This causes you to "descend" into the sub-library, so that you now see (in the same window) a variety of individual WaveTrain source modules (components) which have been collected for convenience into the sub-library. The window should look similar to the picture below, although the exact arrangement of the icons may differ. Note that in the picture below, the window size chosen for the illustration hides some of the individual components.

Figure: Contents of SourceLib (a sub-library of WtLib)

Note the toolbar button that is marked with the red arrow in the above figure. This button has Windows' standard "up-directory" icon: in the System Editor context, this signifies going up one level in a system hierarchy. If you left-click that button, you will return to the level that shows the six sub-libraries. Alternatively, you can go up one level by right-clicking on blank space in the editor window, then selecting "up one level" from the context menu. Double-left-click again on the SourceLib icon to descend again.

Now begin your system construction by copying the PointSource component into your blank new system window. The steps are:

(1) Find PointSource in the SourceLib window. Select PointSource by moving the cursor over the icon and left-clicking. Notice this outlines the icon in red.

(2) Use the window's menu bar to execute Edit - Copy PointSource.

(3) Move to the other System Editor window where you are building the new system. In that window, go to the menu bar and execute Edit - Paste PointSource.

(4) When first pasted, the icon may be jammed into the upper left hand corner of the System Editor window. By left-clicking the icon and dragging it, you can move it to any desired location within the window.

To select and paste additional components, simply repeat the copy-paste procedure for the additional components. In order to follow along with the illustrations and exercises in the remainder of this chapter, you should now copy and paste the remaining components in the following picture (you are building the same system as in the WtDemo system that was shown in the quick tour):

To find the components, you will want to access the additional sub-libraries OpticsLib, AtmosLib, and SensorLib:

Component

Nomenclature: "components" in WaveTrain may also be called "subsystems", or "modules".

Appearance of the component icons

Do not be concerned if your component icons look somewhat different than the ones in the above figure: the icon pictures have occasionally changed during WaveTrain development cycles. However, the names of the systems in the title bars at the bottom of the icons should be identical! In fact, the icon pictures come in various flavors and color schemes, as well as various orientations. Later we explain how to modify the icons for aesthetic reasons, if desired.

The icon pictures should be interpreted loosely, and not taken too literally. For example, in the above picture the IncomingSplitter has the splitting interface oriented in the "wrong" direction for sending light to the SimpleFieldSensor. Such icon details have no functional effect whatsoever in the WaveTrain interface: it is only the connections that you will make between component blocks that determine where the light goes. Any component icon picture could be replaced by an arbitrary new picture with no effect whatsoever on system function.

Deleting a component

If you make a mistake, or are just experimenting, you can remove a component from your system by selecting it (left-click on the icon), and using the window menu sequence Edit - Delete. Alternatively, after selecting, right-click to get a context-sensitive menu that also presents a Delete option.

Alternate copy-paste procedures

As usual in graphical interfaces, there are alternate procedures available for copying and pasting. For example, once you have selected a component by left-clicking, then instead of using Edit - Copy from the menu bar, you can immediately right-click and obtain a context-sensitive menu that also offers the Copy command. Then, to paste the copied component into the new system window, you can right-click on blank space in the new window and obtain a context-sensitive menu that offers the Paste option.

You can also select more than one component at a time, using <Ctrl>-left-click to select the second, third, etc. components. The selected components can all be copied at once and then pasted at once. When first pasted, they may all appear in the upper-left corner, stacked on top of each other. You can then drag them individually to their desired locations.

Saving systems, opening existing systems

Saving

At this stage, save your new system before proceeding to the next stage of system construction. A system can be saved at any stage; no particular level of completeness needs to be achieved before saving. To save the new system, the steps are:

(1) In the System Editor window menu bar, left-click File - Save As.

(2) Navigate to any directory where you want to store your system. (It would be preferable to not use any tempus or WaveTrain subdirectories.)

(3) Specify a system (file) name of your choice, and press Save. The file extension .tsd (tsd = "tempus system definition") will be automatically appended to the name you specify.

In older versions of WaveTrain, File - Save As may first place you in a WaveTrain library directory: you do not want to save your system there. Wherever you choose to save it, it is usually good practice to store each new WaveTrain system in its own directory, because the process of building the system, the runset and then executing the runset will generate numerous files associated with one system.

Once the new system has been saved for the first time, subsequent changes can be saved by using just File - Save.

Opening an existing system

Suppose that you wish to work with a system that has been previously saved, but is currently not open in a System Editor window. To do this, the steps are:

(1) Open a new System Editor window from the TVE toolbar.

(2) In the editor window menu bar, left-click File - Open - Browse.

(3) In the resulting directory window, navigate to the directory where the system of interest is stored.

(4) Select the .tsd file that bears the system name you want, and press Open (or just double-left-click the .tsd file name).

(*) As an alternative to File - Open - Browse, the menu sequence File - Recent systems is also useful: this presents you a quick list of systems that you've recently opened.

The present TVE environment allows a maximum of three separate System Editor windows to be open at one time.

Next steps in building your system

After you have copied or added the desired subsystems into your new or working system window, you will need to set their parameters, and connect them together. In general, these steps can be done in either order.

Component parameters, inputs and outputs

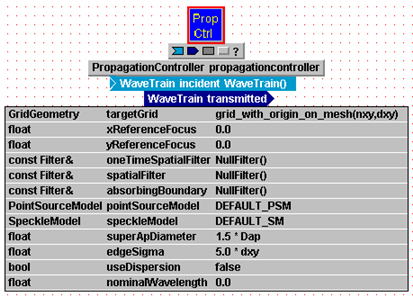

After you copy or add components (subsystems), you will need to assign values to their "parameters", and possibly to some of their "inputs". These two terms are used in a specialized sense in WaveTrain: they are both inputs in a generic sense, but the distinction is that "parameters" are fixed in time, whereas "inputs" may or may not change with time.

A typical component "parameter" might be an aperture diameter, a source power, or a mesh spacing.

A typical component "input" might be a "wavetrain" (WaveTrain's data structure that carries the optical field information). The data in a wavetrain incident on a component usually changes as the simulation time advances. On the other hand, timing parameters that define sensor exposures are also "inputs", even though most of these do not change with time.

A typical component "output" might be a wavetrain, or a time-integrated sensor exposure map.

A component may have any number of parameters, inputs and outputs. A parameter must be assigned a value. An input usually receives its value by being connected to the output of another block, but sometimes an input is assigned a value explicitly. Outputs are usually connected to the inputs of other components; in some cases, outputs are not graphically connected to anything, but may just be recorded. These options will become clearer as you continue with the construction of the tutorial system.

In the subsequent sections of this introductory tutorial, you will see many examples of parameters, inputs, and outputs. We will refer to them without quotes from now on; it should be clear from the context whether "input" is meant in the specialized WaveTrain or the generic sense.
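As a rough mental model of the distinction drawn above, the following Python sketch represents a component as a record with parameters (fixed in time), inputs (set by a connection or by an explicit value), and outputs. This is only an illustration, not WaveTrain's internal data structure: the class names, the helper `unset_items`, and the input/output names "incident" and "fld" are assumptions made for the example, as is the rule that every input needs either a connection or a value.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Input:
    """An input: may vary with time; set by a connection or an explicit value."""
    value: Optional[str] = None           # explicit setting, if any
    connected_from: Optional[str] = None  # "component.output" feeding this input


@dataclass
class Component:
    name: str
    # Parameters are fixed in time; None marks a value that is still missing.
    parameters: Dict[str, Optional[str]] = field(default_factory=dict)
    inputs: Dict[str, Input] = field(default_factory=dict)
    # Outputs may be connected to other components or simply recorded.
    outputs: List[str] = field(default_factory=list)


def unset_items(c: Component) -> List[str]:
    """List parameters without a value and inputs with neither value nor connection."""
    missing = [f"parameter {p}" for p, v in c.parameters.items() if v is None]
    missing += [
        f"input {i}"
        for i, inp in c.inputs.items()
        if inp.value is None and inp.connected_from is None
    ]
    return missing


# Hypothetical sensor: "incident" and "fld" are illustrative names only.
sensor = Component(
    name="simplefieldsensor",
    parameters={"wavelength": "wvln", "nxy": "apdiam / propdxy", "dxy": None},
    inputs={"incident": Input(), "on": Input(value="true")},
    outputs=["fld"],
)
print(unset_items(sensor))  # ['parameter dxy', 'input incident']
```

In this picture, connecting the output of another block to "incident" and typing a value for "dxy" would empty the list.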

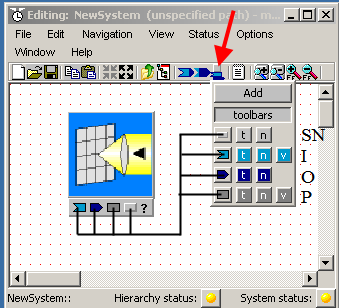

Display/hide graphical elements

Before actually setting any parameters or connecting inputs and outputs, you must become familiar with certain mechanics of the graphical user interface (GUI) related to displaying and hiding of the component graphical elements. Notice that, in the copy-paste operations you performed above, the component (subsystem) icons consisted of a picture, a miniature toolbar beneath the picture, and a component name bar below that.

Displaying/hiding the component parameters, inputs and outputs

Due to the finite display space available, it is frequently useful (or necessary) to hide various graphical elements. Of course, to initially set the parameters and to connect the inputs and outputs, these items must be displayed.

To display or hide, you will use one of the toolbar buttons in the System Editor window, and also the miniature toolbars located underneath the component icons. The master control for displaying and hiding is the toolbar button indicated by the red arrow in the picture at right. In the new system window in which you have been assembling your system, left-click the indicated button. That pulls down the palette which you see in the picture at right. This palette contains six rows of buttons: for now we are just interested in the last four rows, each of which consists of three or four small buttons. The first of these rows is labeled "SN" in the picture, and controls display of System Names. The second row, labeled "I" in the picture, controls display of Inputs. The third row, labeled "O", controls display of Outputs. The fourth row, labeled "P", controls display of Parameters.

Global display/hide: As an initial exercise, after pulling down the palette, left-click on the leftmost "I" button: in your new system window, you should see that the inputs of all your components are now displayed. The display may be messy because the icons are too close to each other for present purposes. Grab one or two of the icons and move them to see everything for those icons. The palette buttons are all "toggles", so click the same button again to hide the inputs. The leftmost button in the "O" row serves the same function for component outputs, and the leftmost "P" button serves that function for the component parameters. Any combination of I, O, and P may be displayed. Finally, note that the leftmost "SN" button allows you to turn off/on the display of component (system) names; these take less room, so usually there is not much point in hiding the names.

Individual component display/hide: Instead of applying the display/hide toggles to all components at once, it is often very helpful to apply the toggles to one component at a time. To do this, simply select (left-click) the icon of interest, and then press the above-discussed palette buttons.

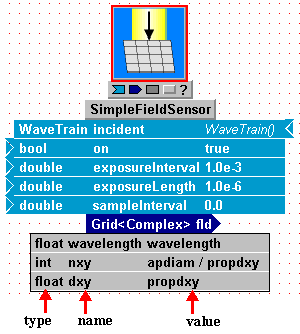

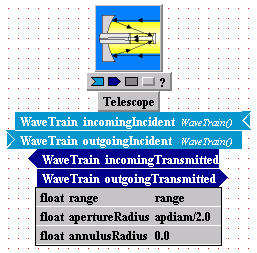

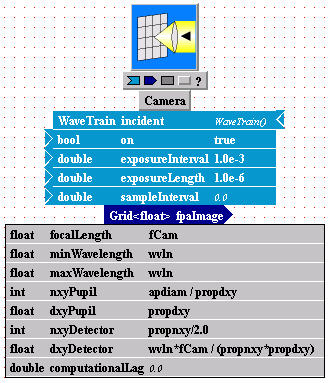

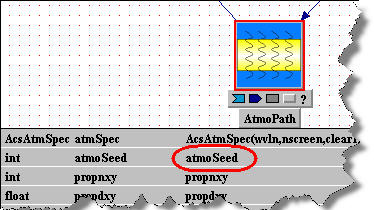

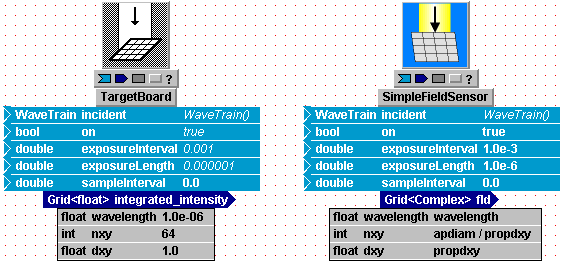

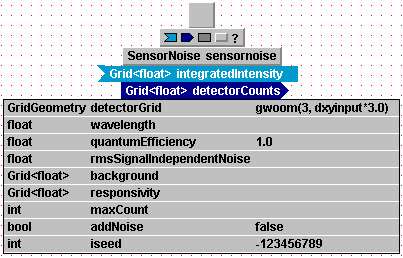

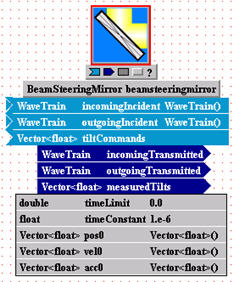

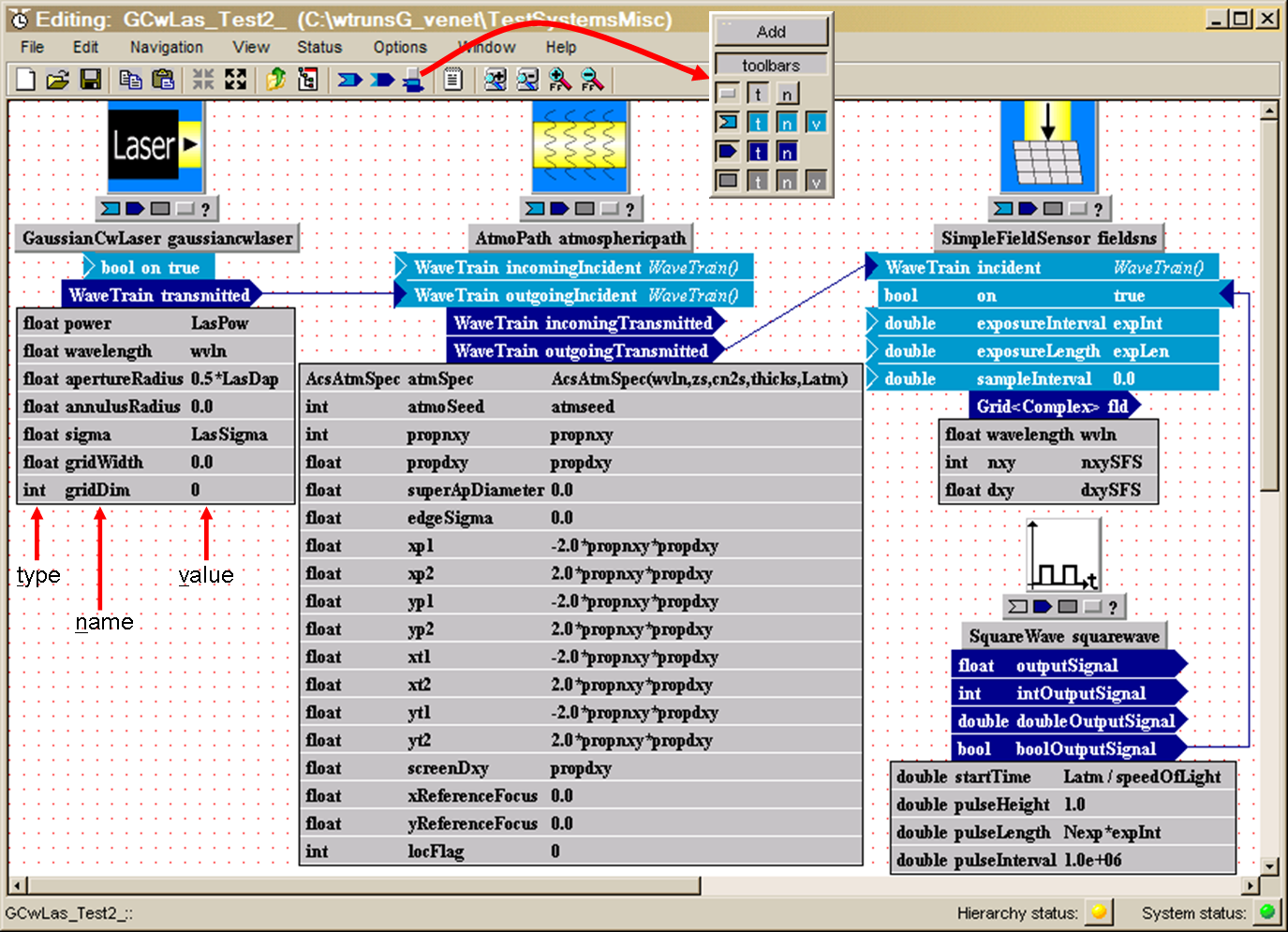

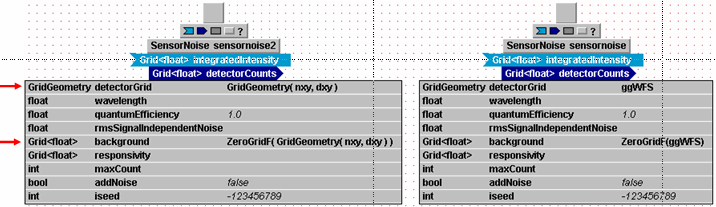

Displaying/hiding type, name, value elements: Next, consider the palette buttons labeled "t" (= type), "n" (= name), "v" (= value). Each of the SN, I, O, P rows in the previous figure has such buttons. As an exercise, select the SimpleFieldSensor icon in your new system, and use the palette buttons in the leftmost column to display inputs, outputs, and parameters. When all elements t, n, and v are displayed, your component should look like the illustration at right. Note that inputs (light blue fields) and parameters (light grey fields) have three columns: type, name, and value, as indicated by the red arrows. Outputs have only two columns: t and n. You can use the subsidiary palette buttons labeled t, n, and v to display/hide any combination of individual elements of the I, O, P fields. Until you become more familiar with the significance of the elements, it is probably best to display all elements or none.

Miniature toolbar under component icon: From inspection of the above picture, you will see a miniature toolbar directly below the component icon, above the component name. The mini-toolbar has symbols that look exactly like the buttons in the left column of the palette. Once you have used the palette to set the display/hide properties of the t, n, v elements to your liking, then you can more quickly display/hide the SN, I, O, P rows by just left-clicking the mini-toolbar buttons. Note that clicking the mini-toolbar buttons preserves the t, n, v settings that you have chosen with the palette.

The component name fields: We have not yet

said much above about the SN row of the palette, and the display fields that it

controls. The system (component) name fields have a different function

than the I, O, P fields, although the display/hide features are controlled in

exactly the same way using the palette and the mini-toolbar. The example

at right shows one of the

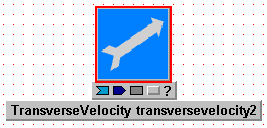

TransverseVelocity components from the new system that

you have assembled.  We have used the palette buttons to display both t and n for the SN row, and we

have hidden the I, O, P rows. The t (type) field in the SN bar corresponds

to what is technically called the "C++ class name" in the WaveTrain code.

The text in this field, "TransverseVelocity", is

not user-editable. The n (name)

field in the SN bar is also assigned by default when you insert the component

into your system. However, the text in the n field is arbitrarily

adjustable by the user. There is usually no need to make any

adjustment to the default assignment, but this name can be changed to conform to

user preference. A significant point is illustrated by the example at

right. Note that the System Editor assigned the default name

"transversevelocity2".

That is because we inserted two

TransverseVelocity

components into our new system, and this was the second "instance" of the

TransverseVelocity

type that we inserted.

You are allowed to change the name and suffix number to anything that seems

meaningful or convenient to you, by clicking on the name and editing. The

numbering need not be consecutive, or you can just use different names and no

numerical suffix: the only requirement is that multiple instances of a

"class" must have distinct names.

Now that you have mastered the displaying and hiding of display elements, you are ready to set the parameters. In your new system, select the SimpleFieldSensor component, and display all the I, O, and P elements to follow along. After you've copied SimpleFieldSensor from WtLib, and you've displayed all the elements, it should look like the illustration at right.

Note: Depending on the age of your WaveTrain version, there may be some differences in the contents of the rightmost "value" column.

We now want to enter desired values in the value fields (rightmost column) of the parameter block (light grey) and the input block (light blue). In WaveTrain, we use the term "setting expression" to denote the expression entered in a value field. Note that in this example all the value fields are already filled in with some default setting expressions. This will not be the case with all components: sometimes the value fields are initially blank. In either case, these initial settings are usually not what you want in your new system.

Note that some setting expressions in the picture are simple numbers ("1.0e-3"), some are symbols ("wavelength", "propdxy"), some are boolean expressions ("true"), and some are algebraic expressions ("apdiam / propdxy"). After you finish the introductory tutorial, you can obtain full details on the expressions and functions that are allowed in setting expressions by referring to the detail chapter on Data Entry.

The simplest method of assigning a setting expression is to enter a number. If you enter a number and press <Enter>, you are completely done with that parameter. However, this is often undesirable for two possible reasons:

(a) You may want to link the value to that of another parameter value.

(b) You may want to vary the value later at the level of the WaveTrain Runset Editor (to be discussed later in this chapter).

To handle issues (a) or (b), you must assign a symbolic name or algebraic expression as the setting expression.

To change or initially assign a setting expression, left-click in the value field of interest and begin typing. A few rudimentary text-editing features are available, e.g. text selection and copy-paste via <ctrl>-C and <ctrl>-V. Press <Enter> to complete the edit. You will now practice entry of setting expressions by changing or accepting all the value fields of SimpleFieldSensor:

Enter setting expressions for SimpleFieldSensor:

(1) Parameter name "wavelength":

(*) Currently, the value of this parameter is

also "wavelength". For practice, enter the new setting expression "wvln".

Note: you are allowed to use the same symbol for a parameter setting as

the parameter name, or a different one: the default here was the same, but

you just changed the value to "wvln".

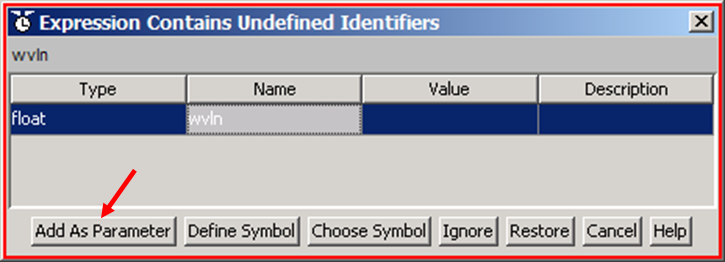

(*) When you pressed <Enter>, the System Editor

popped up another window, entitled "Expression Contains Undefined Identifiers",

as shown below. Press the button "Add as Parameter" to register the symbol

"wvln" that appears in the "name" column:

CAUTION: symbols inside the WaveTrain program are case-sensitive. E.g., "Wvln" is different than "wvln", etc.

(2) Parameter name "nxy":

nxy and dxy define the space mesh on which SimpleFieldSensor will report its results. Let's just accept the default expression "apdiam / propdxy" that is already present:

(*) Left-click in the value field, and simply press <Enter>.

(*) Note that the System Editor again pops up the "Undefined Identifiers" window: press "Add as Parameter" twice to register the symbols "apdiam" and "propdxy".

The two parameters "apdiam" and "propdxy" will refer to key properties of two other components, namely Telescope and AtmoPath, respectively.

(3) Parameter name "dxy":

Again, let's just accept the default expression:

(*) Left-click in the value field, and simply press <Enter>.

(*) This time, the System Editor does not produce the "Undefined Identifiers" window: that is because the symbol "propdxy" has already been registered in the previous step, when you defined the setting expression for parameter name "nxy".

In the SimpleFieldSensor module, the last four inputs (in addition to the parameters) also require setting expressions. On the other hand, the input of type "WaveTrain" will receive its input by being connected to the output of another block, so we enter nothing in the value field of that first input bar:

(4) Input name "on":

(*) Left-click in the value field, and simply press <Enter>.

(*) The boolean symbol "true", which you've just accepted, is already defined in the WaveTrain code, so the "Undefined Identifiers" window did not pop up.

The "true" setting means that the sensor's first exposure window will start at simulation time t=0.

(5, 6) Input names "exposureInterval", "exposureLength":

These names mean exactly what they say: they define, respectively, the interval between start of successive exposure windows, and the length of each exposure window. All the basic WaveTrain sensors are time-integrating sensors, although later you may encounter sensors that directly report power or irradiance.

(*) Accept the default numerical values for these two timing inputs.

Numerical values in the WaveTrain program are in MKS units, unless explicitly specified otherwise: all time quantities are in seconds.

(7) Input name "sampleInterval":

This name may be a little confusing. It allows you to perform multiple WaveTrain propagations during each exposureLength window.

(*) Leave it set at 0.0 for this tutorial: that means that the optical field at the sensor will be computed once during each exposureLength.

After completing the introductory tutorial, you can consult the detail section Sensor Timing and Triggering for further information about timing options.
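To make the relationship between exposureInterval and exposureLength concrete, the short Python sketch below lists the start and end times of the first few exposure windows. It is an illustration of the definitions given above, not WaveTrain code: the function name and the numerical values are hypothetical, the first window is assumed to open at t = 0 (the "on" = true case), and the effect of a nonzero sampleInterval is deliberately not modeled.

```python
def exposure_windows(exposure_interval, exposure_length, n_windows):
    """Return (start, end) times in seconds for the first n_windows exposures.

    Assumes the sensor is "on" from the start, so the first window opens at t = 0.
    exposure_interval is the spacing between the starts of successive windows.
    """
    if exposure_interval <= 0 or exposure_length < 0:
        raise ValueError("interval must be positive and length non-negative")
    windows = []
    for k in range(n_windows):
        start = k * exposure_interval
        windows.append((start, start + exposure_length))
    return windows


# Hypothetical timing values, in seconds (MKS units).
for start, end in exposure_windows(exposure_interval=2.0e-3,
                                   exposure_length=1.0e-3,
                                   n_windows=3):
    print(f"exposure from {start:.4f} s to {end:.4f} s")
```

With sampleInterval left at 0.0, as in this tutorial, each of these windows corresponds to a single computation of the optical field at the sensor.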

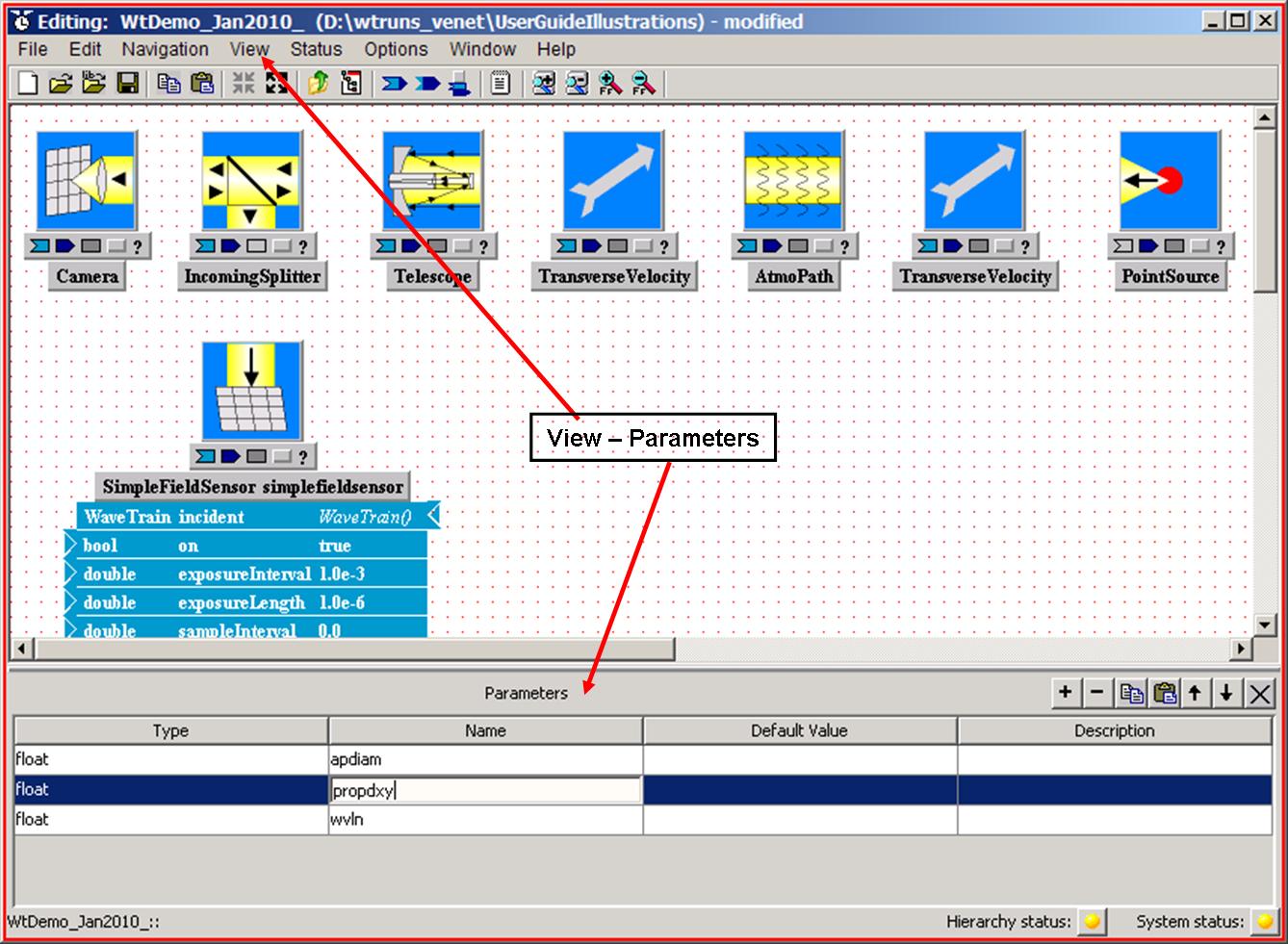

Parameters sub-window:

Before proceeding with the setting expressions for other components, you should now become aware of a sub-window of the System Editor. Using the System Editor View menu, execute the menu commands View - Parameters. This makes visible a sub-window within the System Editor, which we call the Parameters Panel, as shown in the following picture:

The Parameters Panel contains a listing of all the symbols that you have defined so far: "wvln", "apdiam", and "propdxy" all appear. If undesired symbols still appear in the list (e.g., from some experimentation you might have done), you can (and should) delete those by left-clicking in their row, and pressing the ![]() button at the top right of the Parameters Panel.

For now, let's ignore the "Default value" and "Description" columns in the Parameters Panel. You can toggle the Parameters Panel display on and off, as convenient, using View - Parameters.
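The registration behavior seen so far (a new symbol triggers the "Undefined Identifiers" window once, and then appears in the Parameters Panel) can be mimicked with a few lines of Python. This is only a sketch under stated assumptions: Python's own expression parser stands in for WaveTrain's, so it handles only simple arithmetic expressions and function calls; the function name `undefined_identifiers` is hypothetical; and treating "false" as predefined alongside "true" is an assumption.

```python
import ast

# "true" is described in the text as already defined; "false" is assumed here.
PREDEFINED = {"true", "false"}


def undefined_identifiers(expression, registered):
    """Return the sorted symbols in `expression` that are not yet registered."""
    tree = ast.parse(expression.strip(), mode="eval")
    # Skip names that are being called as functions, e.g. AcsAtmSpec(...).
    called = {id(node.func) for node in ast.walk(tree) if isinstance(node, ast.Call)}
    symbols = {
        node.id
        for node in ast.walk(tree)
        if isinstance(node, ast.Name) and id(node) not in called
    }
    return sorted(symbols - set(registered) - PREDEFINED)


registered = []  # plays the role of the Parameters Panel list
for expr in ["wvln", "apdiam / propdxy", "propdxy", "true", "1.0e-3"]:
    new = undefined_identifiers(expr, registered)
    registered.extend(new)  # the "Add as Parameter" step
    print(f"{expr!r}: new symbols {new}")

print(registered)  # ['wvln', 'apdiam', 'propdxy']
```

Because the comparison is case-sensitive, an expression containing "Wvln" would be reported as a new, undefined symbol even after "wvln" has been registered.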

Help pages for individual WaveTrain components

In this tutorial, we guide you by giving you valid setting expressions to enter for all parameters and inputs. Naturally, when you eventually do this on your own, you will need reminders of the meaning of, and valid possible settings for, the many component parameter and input names. This information can be obtained for each component by left-clicking the question-mark button, ![]() , at the bottom right of any component icon.

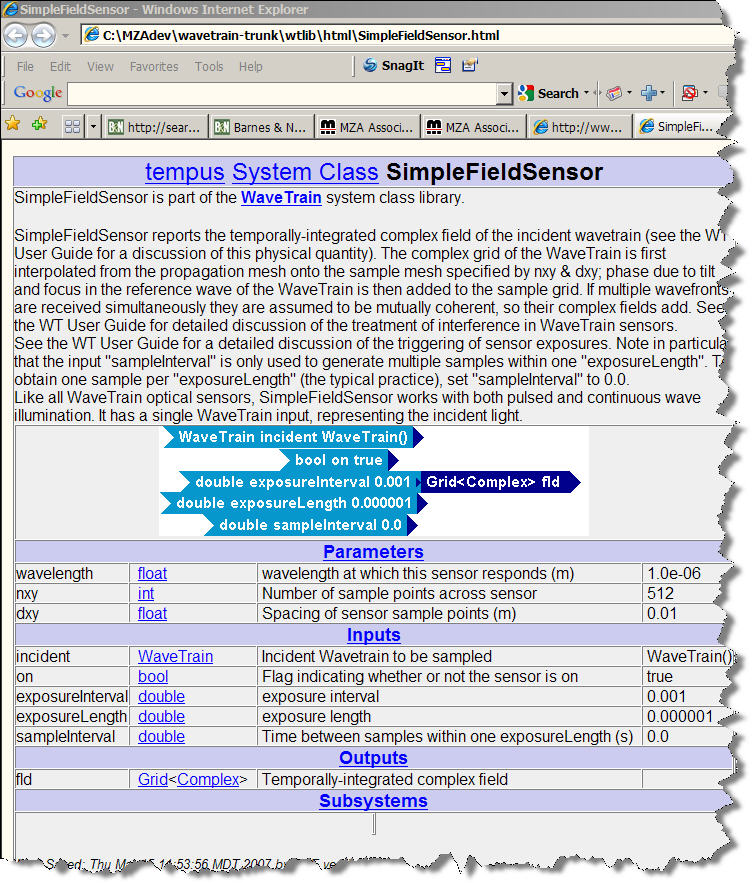

Try this, for example, with the SimpleFieldSensor component whose settings you entered above. You will see that a HTML help page opens in your web browser: this page contains a summary description of the component's function, and short physical definitions of each parameter, input and output. Sometimes these descriptions may be terse, and you may still have to consult sections of the User Guide for interpretation. Nevertheless, the component help page is the place to start.

The picture below shows the help page that you should see for SimpleFieldSensor:

Enter setting expressions for remaining components:

At this point, you have completed the setting expressions for the SimpleFieldSensor module, and you should now enter the setting expressions for the remaining components as follows.

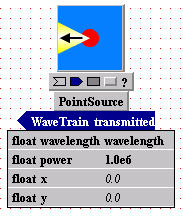

Enter setting expressions for PointSource:

Initially, PointSource copied from WtLib has the default values shown below.

(*) Since we decided above to represent the propagation wavelength by the symbol "wvln", you should change the symbol "wavelength" in the value field to "wvln".

(*) Accept the default numerical values of the other parameters as they are.

(Note that the PointSource parameter named "power" is poorly named: this parameter is actually the Watts/steradian produced by PointSource).

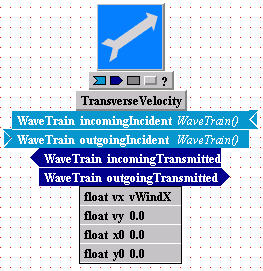

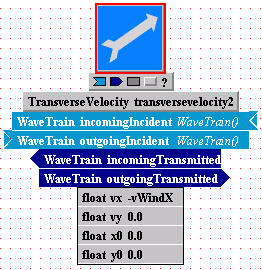

Enter setting expressions for TransverseVelocity (first instance named transversevelocity, and second instance named transversevelocity2):

You will assign the parameter values to represent a uniform atmospheric wind speed, transverse to the propagation path, with stationary source and receiver. (Picture the three velocities with respect to an earth frame, which is considered inertial.)

(*) Change the default settings to match those in the pictures below:

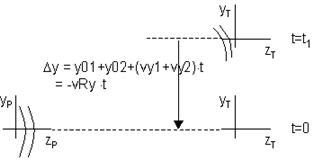

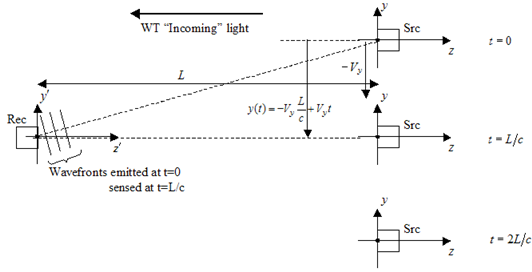

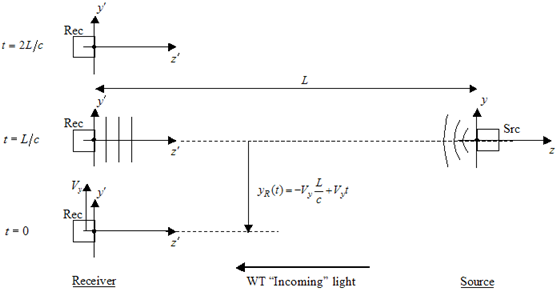

Notice that we have entered "vWindX" in one motion module and "- vWindX" in the other. To understand the physical meaning of these settings (after completing the present tutorial), you can consult the User Guide detail sections for further information about the specification of transverse displacement and transverse motion in WaveTrain.
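The two instance names used in this step, "transversevelocity" and "transversevelocity2", follow the default naming described earlier for the component name fields. The Python sketch below reproduces that pattern. It is an assumption generalized from this one example (lower-cased class name, numeric suffix starting at 2), not a statement of how the System Editor is implemented, and the function name is hypothetical.

```python
from typing import Iterable


def default_instance_name(class_name: str, existing: Iterable[str]) -> str:
    """Return the lower-cased class name, adding the first free suffix (2, 3, ...)."""
    taken = set(existing)
    base = class_name.lower()
    if base not in taken:
        return base
    n = 2
    while f"{base}{n}" in taken:
        n += 1
    return f"{base}{n}"


names = []
for _ in range(2):
    # Insert the same component type twice, as in the tutorial system.
    names.append(default_instance_name("TransverseVelocity", names))
print(names)  # ['transversevelocity', 'transversevelocity2']
```

Whatever names you choose when editing the n field, the only requirement stated in this guide is that multiple instances of the same class have distinct names.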

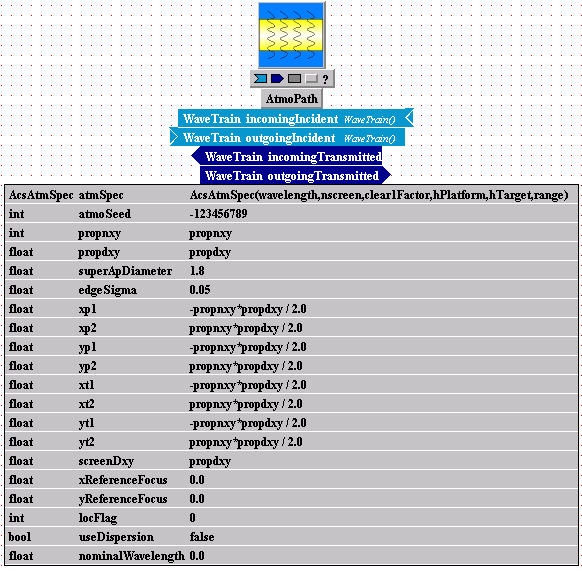

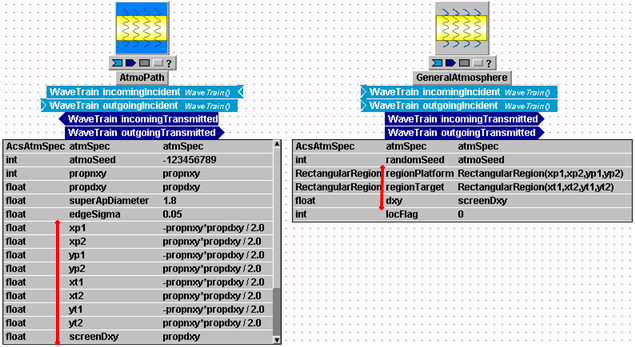

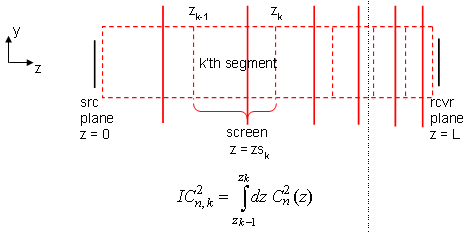

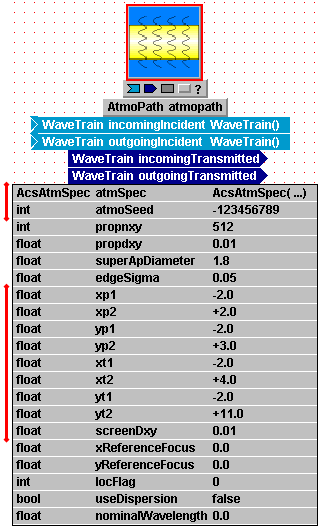

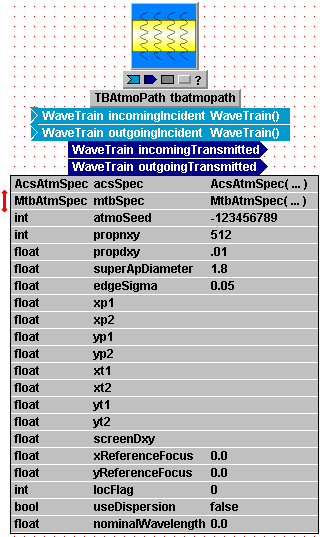

Enter setting expressions for AtmoPath:

This is a complicated component, with many parameters. AtmoPath contains specifications for both the Fourier propagation mesh and the turbulence phase screen parameters. For present tutorial purposes, we will enter settings here without explaining all the details. The details can be learned later by consulting various sections of the Modeling Details section of the User Guide.

Parameter name "atmSpec":

This parameter contains most of the settings that define the atmospheric turbulence along the propagation path. The setting expression in this value field is something new compared with the components you worked with above: it is a special function, "AcsAtmSpec(...)", that is part of the WaveTrain code. You will now set the arguments of this function:

(*) Left-click in the value field, and change "wavelength" to "wvln", to accord with your previous symbol definitions.

(*) Press <Enter>, and you will once again see the "Undefined Identifiers" window. Left-click the "Add As Parameter" button five times, to register the remaining default symbols that appear in the argument list, namely "nscreen, clear1Factor, hPlatform, hTarget, range". We will briefly discuss the physical significance of these symbols later in the tutorial, when we finally assign them numerical values.

Parameter name "propnxy":

Parameter names "propnxy" and "propdxy" define the size and spacing of the mesh that WaveTrain will use for its Fourier propagations. Obviously, these are critical specifications.

(*) Left-click in the value field, press <Enter>, and "Add As Parameter" to accept the default symbol "propnxy". (You already defined the symbol "propdxy" in a previous component.)

Parameter names "xp1" through "yt2", and "screenDxy":

These parameters define the dimensions and mesh spacing of the turbulence phase screens. The symbols in the respective setting expressions have all been registered previously, so you need do nothing here.

Remaining parameter names:

The setting expressions are either numerical values, or symbols previously defined. You will accept all these for now, so no further action is required to complete the AtmoPath settings.

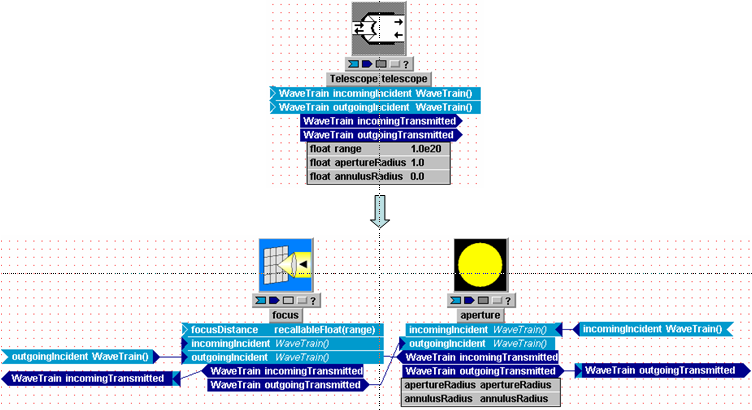

Enter setting expressions for Telescope:

This component performs two functions: (a) it applies a binary aperture (in general, annular), and (b) it undoes the wavefront curvature acquired due to propagation from a point source to the telescope. Function (b) works together with the Camera module that follows.

Parameter name "range":

Here you simply enter the distance to an OBJECT plane whose image you want to generate in the focal plane of a subsequent Camera module:

(*) The desired setting expression in the value field, "range", is already present by default, and you have previously registered this symbol. No further action is required.

Parameter names "apertureRadius" and "annulusRadius":

(*) These already have the desired setting expressions entered by default, and the symbol "apdiam" has been registered previously. No further action is required.

Note the mixed nomenclature: "apertureRadius" signifies the outer radius of the annular aperture, while "annulusRadius" signifies the inner radius, all in MKS units (i.e., meters).
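The meaning of the two radii can be illustrated with a small Python sketch of a binary annular aperture: mesh points whose distance from the axis lies between the inner radius ("annulusRadius") and the outer radius ("apertureRadius") pass light, and all others are blocked. This is an illustrative sketch, not the Telescope implementation: the function name, the choice to center the mesh on the optical axis, the inclusive treatment of the two boundaries, and the numerical values are all assumptions.

```python
import math


def annular_mask(nxy, dxy, aperture_radius, annulus_radius):
    """Return an nxy-by-nxy grid of 0/1 values for a binary annular aperture.

    A point is transmitted (1) when annulus_radius <= r <= aperture_radius,
    with r measured in meters from the mesh center.
    """
    half = (nxy - 1) / 2.0  # assumed: mesh centered on the optical axis
    mask = []
    for iy in range(nxy):
        row = []
        for ix in range(nxy):
            r = math.hypot((ix - half) * dxy, (iy - half) * dxy)
            row.append(1 if annulus_radius <= r <= aperture_radius else 0)
        mask.append(row)
    return mask


# Hypothetical values: 8 x 8 mesh, 0.25 m spacing, 1.0 m outer and 0.25 m inner radius.
for row in annular_mask(nxy=8, dxy=0.25, aperture_radius=1.0, annulus_radius=0.25):
    print("".join("#" if v else "." for v in row))
```

Setting the inner radius to zero in this sketch gives a plain circular aperture.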

No setting expressions required for IncomingSplitter

This component makes a copy of the incident wavefront and retransmits it into two separate optical paths. Note that the wavefront is duplicated: it is NOT split in energy.

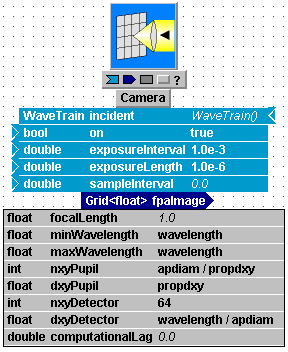

Enter setting expressions for Camera:

Patience, you're almost done!

(*) Edit the setting expressions in Camera's parameter value fields to match the picture below.

(*) One CAUTION: the parameters named "nxyPupil" and "dxyPupil" unfortunately have quite misleading names. Unless you explicitly use an esoteric feature of WaveTrain ("wavesharing"), these parameter values are NOT used in the calculation. The aperture which controls the diffractive spreading in Camera's image is the Telescope aperture that you specified above.

(*) The setting expression you entered for the parameter named "dxyDetector" exploits the full resolution available from

Execute the menu commands File - Save

Of course, you can do this at any time during the above work, but make sure you do so before proceeding to the next stage of construction.

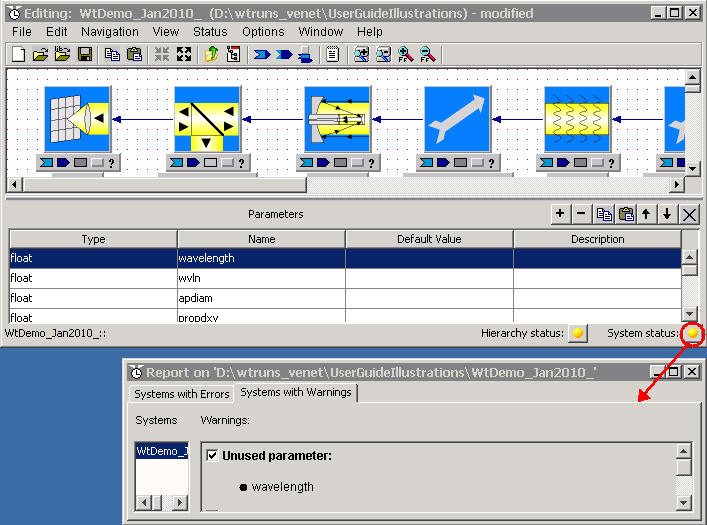

You have now completed the entry of setting expressions for all your component parameters. At this point, you should verify that the settings are complete and syntactically consistent. The mechanism provided by WaveTrain to perform this check is the pair of status bulbs at the bottom right of the System Editor window.

Status bulbs

At the bottom right of the System Editor window, you will see the two status indicators ![]() and ![]() . The two colored bulbs can be either red, yellow or green, indicating various levels of missing or inconsistent information in the parameter and input setting expressions.

The "System status" bulb refers to the system level currently displayed in the Editor window: this is the one of present interest.

If the status bulbs are not green, it means that one or more problems have been detected by the TVE. In the system you are constructing, left-click on the "System status" bulb, which should be green at this point. A message box will pop up, telling you that no problems are detected at this time.

Now that you have completed the setting expressions for all the components of your tutorial system, the next step is to connect the components together. This step is very simple: to connect the output of one component to the input of another component, you must:

(a) Make sure you display the inputs and outputs of the components you want to connect.

(b) Place the cursor on the arrowhead of a dark-blue output bar: the arrowhead should turn red when you are over the sensitive region.

(c) Left-click and drag to the connection triangle at the end of the desired light-blue input bar, and release: a connection line will appear.

Carry out steps (a)-(c) to make the connections shown in the following figure:

In producing the above layout, we've used several GUI features to improve the visual presentation:

(a) You can spread out the component icons to give more room, either by dragging the individual icons, or by pressing the "unpack" ( ![]() ) and "pack" ( ![]() ) buttons in the System Editor toolbar.

(b) You can introduce bends in a connecting line, by left-clicking on a line and dragging (right-click and "delete vertex" to undo).

(c) The connection triangles or arrowheads of inputs and outputs may be flipped from one side to the other of the colored bars. To do so, place the cursor on a colored bar and double-left-click. Alternatively, place the cursor on a bar and right-click to get a context-sensitive menu, then "Flip".

(d) The horizontal and vertical layout of components is completely immaterial to their functionality: only the connections control which way light is going. (A later Guide section explains details of coordinate and direction conventions.)

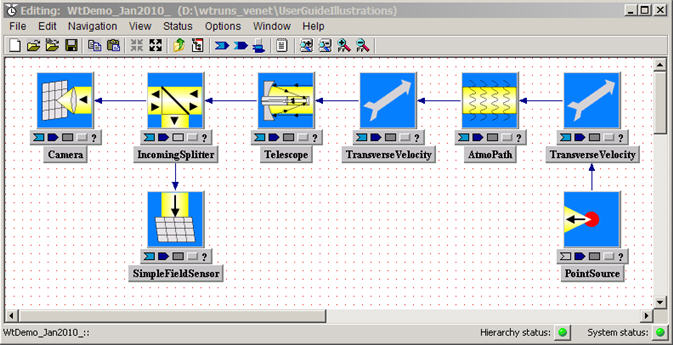

After all components are connected and setting expressions defined, you may want to hide all inputs, outputs and parameters, leaving just the connecting lines showing. In that case, your completed system could be compacted as follows:

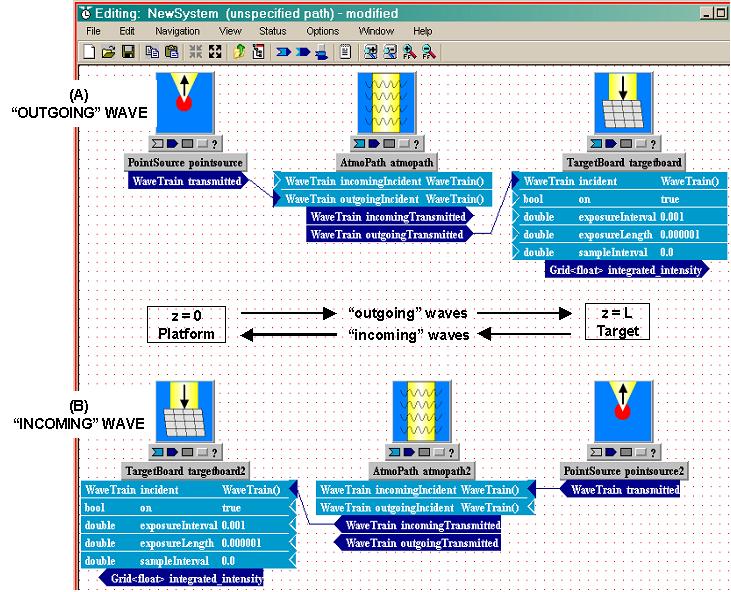

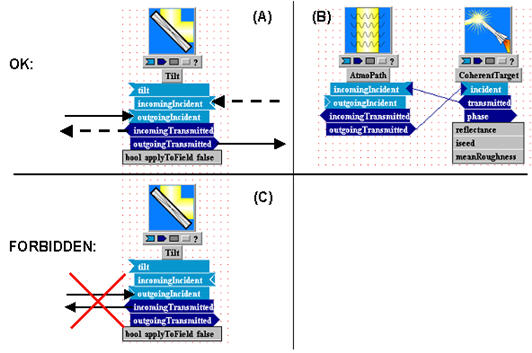

Note that all your connecting lines are single-headed arrows: that is because light is only going in one direction in this tutorial system. In many systems, you will have light going in both directions ("incoming" and "outgoing", in WaveTrain nomenclature).

At this stage, you can always redisplay the inputs, outputs, or parameters of individual components as needed.

Execute the menu commands File - Save

Of course you can do this at any time during the above work, but make sure you do so before proceeding to the next stage of construction.

Create a new run set for the WaveTrain system

Now that you have completed assembly of your WaveTrain system in the System Editor, you must create a "run set" for it. After the run set is created, you will be able to compile and run the simulation with two button clicks.

To create a run set for your system model, go to the System Editor file menu and

(*) execute File - New - Runset;

(*) in the input box that pops up, enter a name of your choice (say, "A") for your new run set;

(*) press "OK".

In general, the run set name can be constructed from any combination of letters, numerals, and the

underscore character. The data eventually

generated by running your simulation will be stored in an output file

named according to the pattern "SystemNameRunRunSetNameK.trf",

where

SystemName

= name of the WaveTrain system (user-specified)

Run = prefix inserted by the WaveTrain code

RunSetName

= name of the run set (user-specified)

K = a

sequential numerical index assigned by the WaveTrain code

.trf = extension designating a specially-formatted data file (pronounced "turf" by

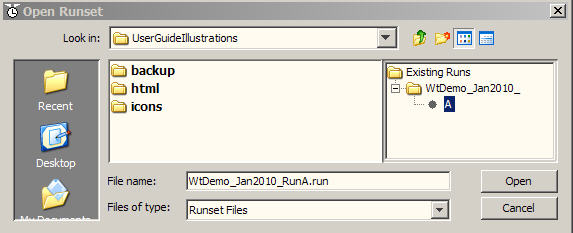

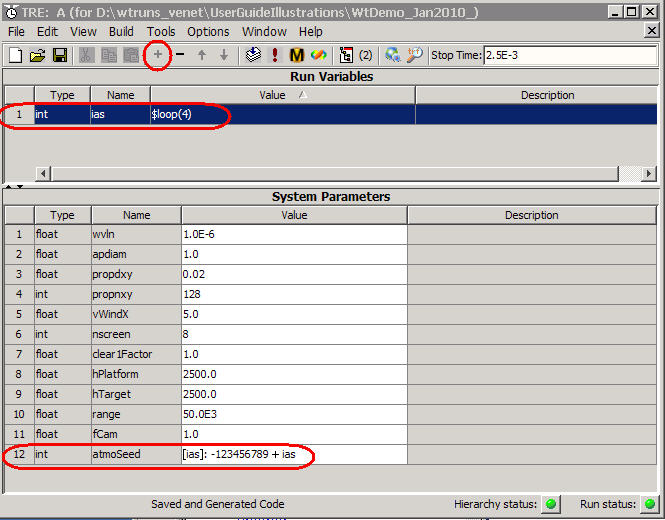

WaveTrain initiates).
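The naming rule just described can be sketched in a few lines of Python. This is only an illustration of the pattern "SystemNameRunRunSetNameK.trf", not WaveTrain code: the function names and the system name "MySystem" are hypothetical, and the check assumes that "letters" means the ASCII letters A-Z and a-z.

```python
import re

# Allowed characters per the text: letters, numerals, and the underscore.
# Assumption: "letters" means ASCII letters only.
_RUNSET_NAME = re.compile(r"[A-Za-z0-9_]+")


def is_valid_runset_name(name: str) -> bool:
    """Return True if name uses only letters, numerals, and underscores."""
    return _RUNSET_NAME.fullmatch(name) is not None


def trf_file_name(system_name: str, runset_name: str, index: int) -> str:
    """Assemble "SystemNameRunRunSetNameK.trf" from its parts."""
    if not is_valid_runset_name(runset_name):
        raise ValueError(f"invalid run set name: {runset_name!r}")
    return f"{system_name}Run{runset_name}{index}.trf"


# Hypothetical system "MySystem", run set "A", first output file (K=1).
print(trf_file_name("MySystem", "A", 1))  # MySystemRunA1.trf
```

Note that the parts are concatenated with no separators, so the file name alone does not always tell you where the system name ends and the run set name begins.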

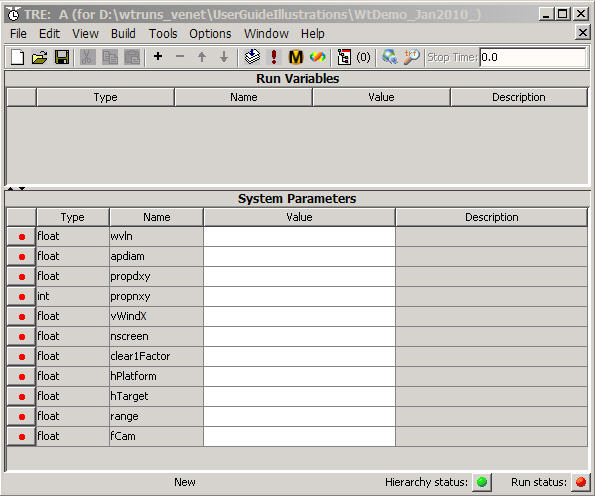

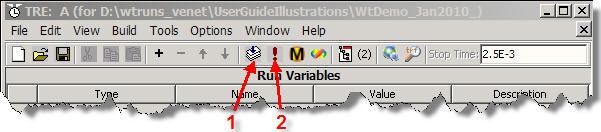

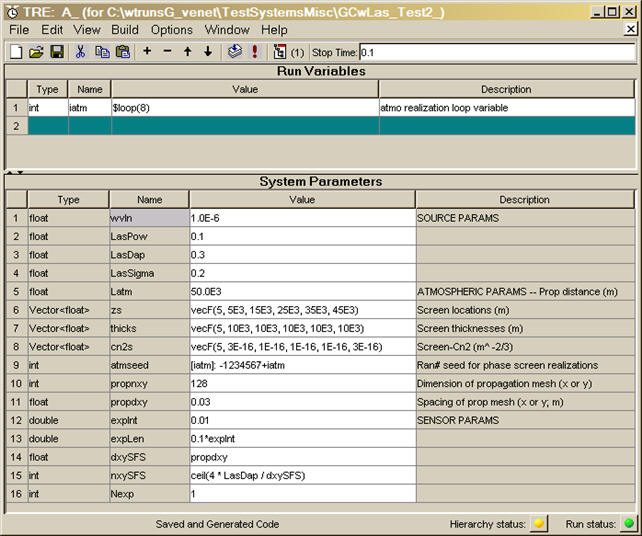

When you pressed OK to create the new run set, you obtained a new editor window that has the appearance shown below. This is called the Run Set Editor window. The acronym "TRE" in the window title bar stands for the full name "tempus Run Set Editor". In the title bar of the window, you will see the name you assigned to the run set, followed by (in parentheses) the directory path and name of the system with which it is associated.

Notice there are two panels in the Run Set Editor:

(a) the Run Variables panel, currently blank;

(b) the System Parameters panel: notice that the parameter names

that appear here are precisely those symbols that you defined when you entered

them previously in the component setting expressions of the system editor

window. You can verify that the name list here exactly matches the

comprehensive name list that appears when you View - Parameters in the

System Editor window.

You must now do the following four things in the Run Set Editor

before you can finally run your simulation:

(a) Assign numerical values (or file sources) to the System Parameters

(b) Possibly define some Run Variables

(c) Enter a simulation Stop Time

(d) Specify one or more variables for recording.

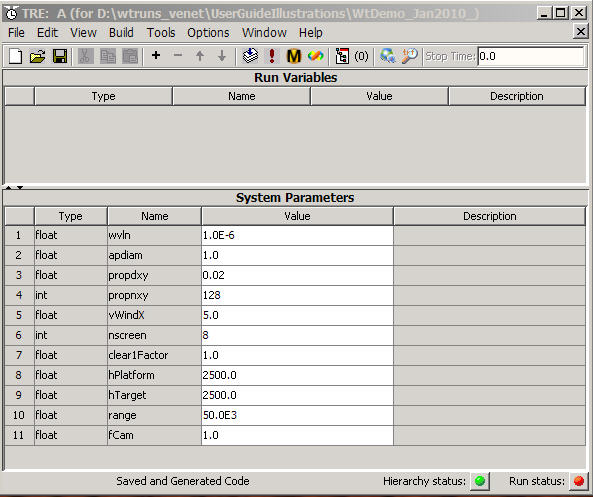

Assign numerical values to System Parameters and Run Variables:

(*) Left-click and type in the "Value" fields of the

System Parameters panel, and assign the values shown in the modified picture

below.

(*) Press <Enter> to accept a value setting before moving to another entry

field

Physical units: inputs, outputs and parameters in WaveTrain are in MKS units. One caution on units: phase or OPD inputs/outputs can be a little tricky, in that different components may assume meters or radians, which will be specified by the help page for the individual components.
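If you need to move between the two conventions by hand, the standard relation is phase = 2π × OPD / wavelength. The following Python sketch shows the conversion; it is not part of WaveTrain, the function names and the 1 micrometer wavelength are hypothetical, and the sign convention of a particular component should still be checked on its help page.

```python
import math


def opd_to_phase(opd_m: float, wavelength_m: float) -> float:
    """Convert optical path difference (meters) to phase (radians)."""
    return 2.0 * math.pi * opd_m / wavelength_m


def phase_to_opd(phase_rad: float, wavelength_m: float) -> float:
    """Convert phase (radians) to optical path difference (meters)."""
    return phase_rad * wavelength_m / (2.0 * math.pi)


wavelength = 1.0e-6  # hypothetical wavelength, in meters (MKS units)
half_wave = opd_to_phase(0.5e-6, wavelength)
print(half_wave)  # half a wave of OPD corresponds to pi radians
```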

(*) Parameter name "nscreen" needs a special

adjustment:

This adjustment is required due to a deficiency in the WaveTrain interface.

We include this bit of ugliness in the tutorial because it will appear in

practically every atmospheric propagation model, and you must get used to it.

Notice that in the original Run Set window above, and in the

System Editor window upon which the run set is based, the editor assigned the

data type of "float" to the symbol "nscreen". But this is incorrect,

because "nscreen" represents the integer number of phase screens in the

atmospheric turbulence model. If you go back to the

AtmoPath component

in the System Editor, you can see that you first entered "nscreen" as an

argument in a function, AcsAtmSpec(..., nscreen, ...). In that kind of

setting expression, and only that kind, the editor is unable to determine the

correct data type for the function arguments, and just labels them all as

"float" type. You must manually correct by doing the following:

(i) In the Parameters Panel of the System Editor,

left-click in the "Type" field of the "nscreen" parameter, and replace "float"

by "int". Press <Enter>.

(ii) Still in the System Editor, execute the menu commands

File - Save.

(iii) In the pop-up box that now appears, click "Yes" to update the open runset. You will see that the "Type" of "nscreen" in the Run Set window has now updated to "int".

After the above manipulations, your runset should look like the following figure:

Execute the menu commands File - Save:

In the Run Set Editor window, execute the menu commands File - Save. Proceed with saving despite the resulting "incomplete" warning.

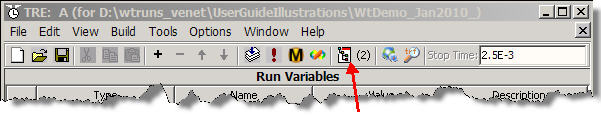

Enter a simulation Stop Time:

In the upper right of the Run Set Editor, find the box called "Stop Time". All WaveTrain simulations start at t=0, and run until the designated Stop Time (in seconds). Whether and when any calculations are done during t=[0.0, Stop Time] depends solely on the sensor timing parameters that you defined in the sensor components of your system. In the above system, you've included two sensors, SimpleFieldSensor and Camera, and you assigned them identical timing parameters. Specifically, you set the sensors to take exposures starting at intervals of 1 msec, with an exposure length of 1 µsec.

(*) Enter the value 2.5E-3 (seconds) in the Stop Time box.

This will produce three exposures, because the first one starts at t=0.
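The count of three can be checked with a short Python sketch. It works in integer microseconds to avoid floating-point rounding, and it assumes that an exposure is counted whenever its start time is no later than the Stop Time; the exact rule applied by WaveTrain is not stated here, so treat this as an illustration only. The function name is hypothetical.

```python
def exposure_start_times_us(interval_us: int, stop_time_us: int) -> list:
    """Start times (in microseconds) of exposures at 0, interval, 2*interval, ...

    Assumption: an exposure is counted if it starts no later than the stop time.
    """
    if interval_us <= 0:
        raise ValueError("interval must be positive")
    return list(range(0, stop_time_us + 1, interval_us))


# Tutorial values: exposures every 1 msec, Stop Time of 2.5 msec.
starts = exposure_start_times_us(interval_us=1000, stop_time_us=2500)
print(starts)       # [0, 1000, 2000]
print(len(starts))  # 3
```

With the same interval, a Stop Time of 3.0E-3 would admit a fourth start time at t = 3 msec under this assumption.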

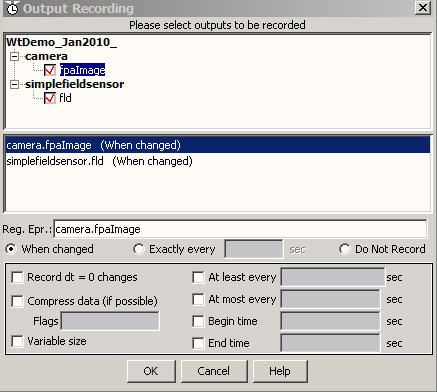

Specify variables for recording:

When you execute the simulation, WaveTrain records no results by default. You must specify precisely which outputs you want to record.

(*) In the Run Set Editor, press the Recorded Outputs button in the toolbar, where indicated by the red arrow in the following figure:

A new window will pop up, entitled "Output Recording" as shown below, which contains a listing of all the component outputs that are available for recording.

(*) Click on both check boxes: this will cause

recording of the camera and field sensor output maps (one map for each exposure

window in each sensor)

(*) Note that below the check boxes, the radio button "When changed" is selected. This is the usual choice; leave all other boxes unchecked.

(*) Press "OK" to accept your selections and to close this window.

Notice that the "Output Recording" button in the Run Set Editor toolbar now has the annotation "(2)", indicating that two output variables have been selected for recording.

Notice also that the status bulbs at the bottom of the Run Set Editor are now

both green, implying that all required settings have been filled in, insofar as

the Editor can determine.

Execute the menu commands File - Save:

In the Run Set Editor window, execute the menu commands File - Save.

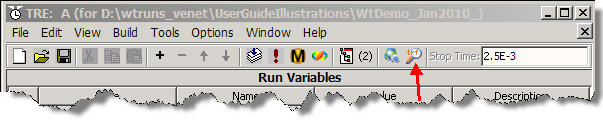

(*) Press the "Compile and link" button in the Run Set Editor (see red arrow #1 in the screenshot below).

A Windows console window will appear, and

some messages will be generated that are useful for debugging in case there are

still setup errors in your system or runset. (The messages will be useful

once you acquire some more experience with WaveTrain). The tutorial system

you've constructed should have no errors at this point, so the console messages

should conclude with:

" Created executable "SysNameRunRunName.exe"

with optimization (Release version).

Make completed successfully

Press any key to continue . . ."

Press any key to close the console window.

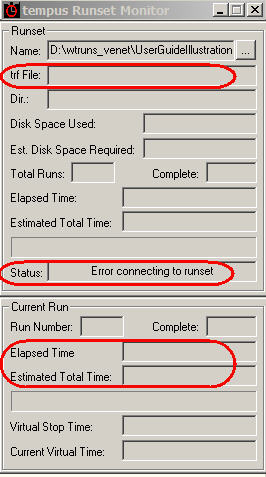

(*) Press the "Execute simulation" button in the Run Set Editor (see red arrow #2 in the screenshot above). As a result, two things will happen:

(i) Another Windows console window

will appear,

containing messages that show the progress of the simulation run. These

messages are not particularly informative to WaveTrain users. Your

tutorial system should complete execution in a few seconds at most:

you should then see the message "execution complete"

in the console window.

(ii) A separate small diagnostic window, entitled "tempus Runset Monitor",

will appear. This window is shown at right.

Your tutorial system will most likely finish too fast for the Runset Monitor to

latch on to the process and begin acting. As a result

the Monitor will report that there was an "Error connecting to runset",

as shown in the picture at right in the second red-circled field. Just

ignore this, and close the Monitor window.

If you are executing a run set that

requires more time, the Runset Monitor may provide some useful diagnostics.

First, the Monitor will report the name of the *.trf file that WaveTrain

created (in particular, the auto-generated numerical index): see the first

red-circled field at right. Lower down in the Monitor, there is a panel

entitled "Current Run", which tells you the run

number currently in progress. Just below that, there are panels that

report the elapsed wall-clock time, and the estimated time remaining to complete

execution of the run set. The latter estimate is typically fairly

accurate, but is not foolproof, because its accuracy depends on the structure of

the looping calculations in the runset.

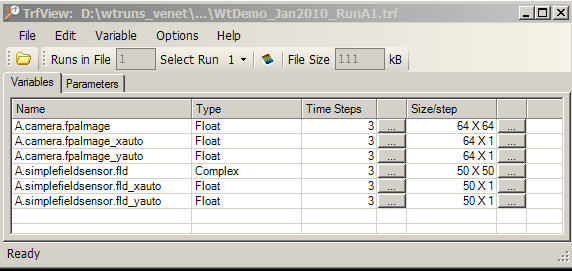

You will now use WaveTrain's TrfView viewer to inspect the recorded simulation results.

Start the TrfView viewer:

(*) Press the "TrfView" button in the Run Set Editor toolbar, where indicated by the red arrow in the screenshot below:

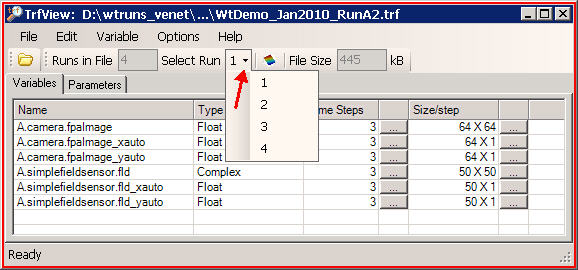

The preceding button press opens the TrfView viewer, and also locates and opens your most recently generated *.trf file. (This *.trf file contains your recorded simulation results). The main window of TrfView then appears, which will look as follows:

The "Name" column contains the names of all

the variables that you checked for recording in the "Output Recording" menu of

the Run Set Editor: note the presence of

"A.camera.fpaImage"

"A.simplefieldsensor.fld".

The "A" prefix is the run set name, which may differ depending on what you named

your run set.

(If you want to inspect data from a different *.trf file, you can go to the TrfView file menu and execute File - Open ... to navigate to and select a different *.trf file name. You can only inspect one *.trf file at a time).

Plot recorded data in TrfView:

(*) Right-click on variable name "A.simplefieldsensor.fld"

to get a context menu

(*) Click "Plot variable"

You should now see the following plot window:

This shows the amplitude (left panel) and

phase (right panel) of the complex field recorded by the SimpleFieldSensor

component. Key features of the plot window are:

(i) The x and y axis scales are in sensor pixel units.

(ii) The phase of the complex field is in radians.

(iii) The amplitude units are a more complicated issue (read the detail sections

on units and sensors

for full discussion).

(iv) The button marked by the red arrow in the picture controls which

sensor exposure (which time step) you are plotting. Currently the window

is showing exposure "1 of 3". Press the button (and its companion buttons)

to cycle among the exposures. Note how the plot appears to (approximately)

translate as you move in time: this reflects the fact that the wind speed

setting in your system causes the phase screens to translate.

(v) The small panel labeled "Time", to the right of the exposure buttons,

tells you the exact simulation time at which the sensor exposure data became

available. In the system that you created, with an exposure length of 1.0 µsec, the end of the exposure windows occurred at t = (0+1.0µsec, 1.0msec+1.0µsec, 2.0msec+1.0µsec), i.e., at t = (0.001msec, 1.001msec, 2.001msec). This is precisely what the "Time" panel reports as you cycle among the exposures.

(*) Click and plot the variable "A.camera.fpaImage"

for further practice.

This variable is the temporally-integrated irradiance (units of J/m²), in the camera focal plane.

(*) Magnify (zoom in on) a section of the plot, by left-clicking and

dragging to outline the section that you want to magnify. To undo the

zoom, right-click in the plot and select "Unzoom".

(*) New as of WaveTrain 2010A: In addition to the variables "A.camera.fpaImage" and "A.simplefieldsensor.fld", the TrfView main window pictured above also listed companion variables "..._xauto" and "..._yauto". These variables automatically record mesh geometry information.

The *.trf file itself:

When using TrfView, the viewer manages all

the opening, closing and reading of the *.trf data files. As you

become a more experienced WaveTrain user, you will want to deal with these files

in other ways than TrfView alone. For now, just verify that the trf file

appears in the same directory where you saved the WaveTrain system.

Remember that the trf file was named according to the pattern

"SystemNameRunRunSetNameK.trf"

In the present case, the suffix K=1 was assigned by WaveTrain, because it was

the first *.trf file having the specified system name and runset name in

the directory.

Suppose you now went on to change some entry values in the System and/or Run Set Editors, resaved your run set under the same name, and executed the simulation again. Then WaveTrain would create a new *.trf file with the K index incremented by 1. Thus, there is never any danger of WaveTrain overwriting previous *.trf files.
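The effect of this indexing scheme can be mimicked with the following Python sketch, which looks through a directory and reports the next index that would be used. This is not WaveTrain's implementation: the function name, the "largest existing K plus one" rule, and the system name "MySystem" are assumptions made for illustration.

```python
import re
import tempfile
from pathlib import Path


def next_trf_index(directory: Path, system_name: str, runset_name: str) -> int:
    """Return one more than the largest K among matching *.trf files (1 if none).

    Assumption: the run set name does not itself end in a digit; otherwise the
    boundary between the run set name and K is ambiguous in the file name.
    """
    pattern = re.compile(
        re.escape(f"{system_name}Run{runset_name}") + r"(\d+)\.trf"
    )
    largest = 0
    for path in directory.iterdir():
        match = pattern.fullmatch(path.name)
        if match:
            largest = max(largest, int(match.group(1)))
    return largest + 1


# Demonstration in a scratch directory with a hypothetical system "MySystem".
with tempfile.TemporaryDirectory() as scratch:
    folder = Path(scratch)
    print(next_trf_index(folder, "MySystem", "A"))  # 1 (no files yet)
    (folder / "MySystemRunA1.trf").touch()
    print(next_trf_index(folder, "MySystem", "A"))  # 2
```

A helper of this kind can be handy in post-processing scripts that need to pick up the most recent output file, which is the one with index K one less than the value returned.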

Congratulations!

You have completed the creation and running of your first complete WaveTrain simulation!

At this point, there are several additional

topics in WaveTrain system construction which you should work through as an

extension to the introductory tutorial. The following procedures are

typically needed, or at least desirable, even in simple WaveTrain systems:

(a) Inserting a loop variable in the Run Set editor

(b) Elevating parameters

(c) Changing the list order of System Parameters

(d) A few clean-up procedures

(e) Basic documentation using the "Description" fields in the Editors

Multiple run sets for a given system